一、简介

文档:https://kubernetes.io/zh/docs/home/

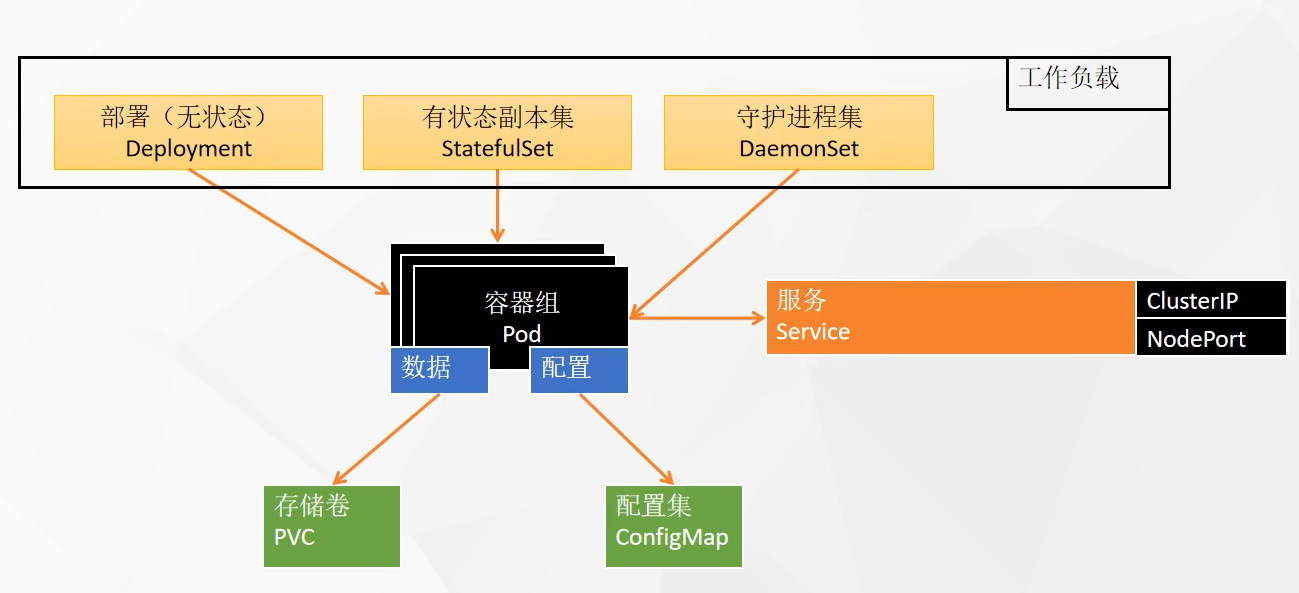

架构:

minikube

https://github.com/kubernetes/minikube

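Background

Traditional deployment: applications run directly on physical servers. Resources are not allocated sensibly between applications, so one application may take most of the resources and the others slow down.

Virtualized deployment: virtual machines isolate applications and use resources better, but each VM virtualizes all the hardware components and also runs its own operating system.

Containerized deployment: like a VM, a container has its own filesystem and its own share of CPU, memory, etc., but it is lightweight and quick to create. Containers support continuous development, integration and deployment. They isolate resources and use them efficiently, and they behave consistently across development, testing and production. Both OS-level and application-level information can be observed.

When a container fails, it has to be restarted without causing downtime. Kubernetes takes care of this and provides:

- Service discovery and load balancing: exposes containers through a DNS name or an IP address. If traffic to a container is high, it load-balances and distributes the network traffic.

- Storage orchestration: automatically mounts a storage system of your choice, such as local storage or a public cloud provider.

- Automated rollouts and rollbacks

- Automatic bin packing: you specify how much CPU and RAM each container is allowed.

- Self-healing: restarts containers that are not healthy.

- Secret and configuration management: stores and manages sensitive information such as passwords, tokens and SSH keys.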

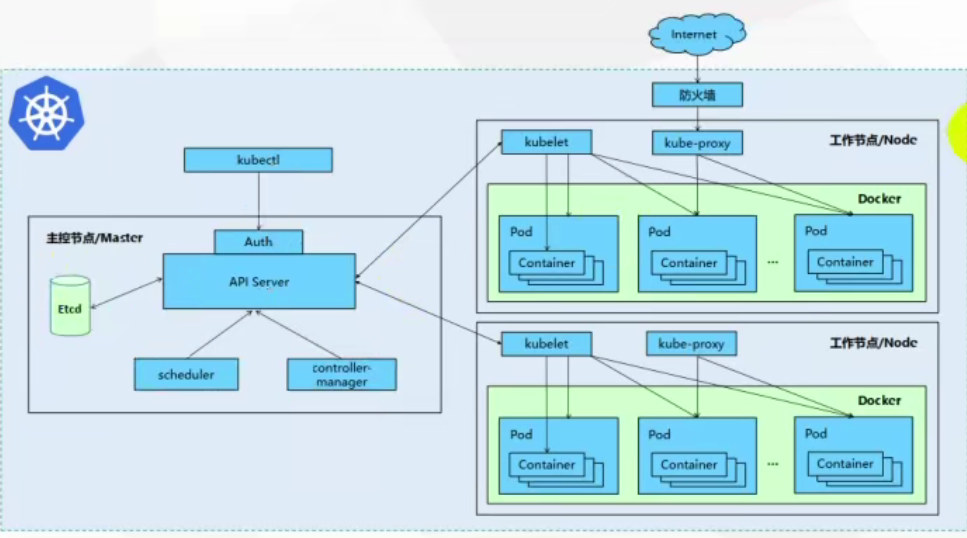

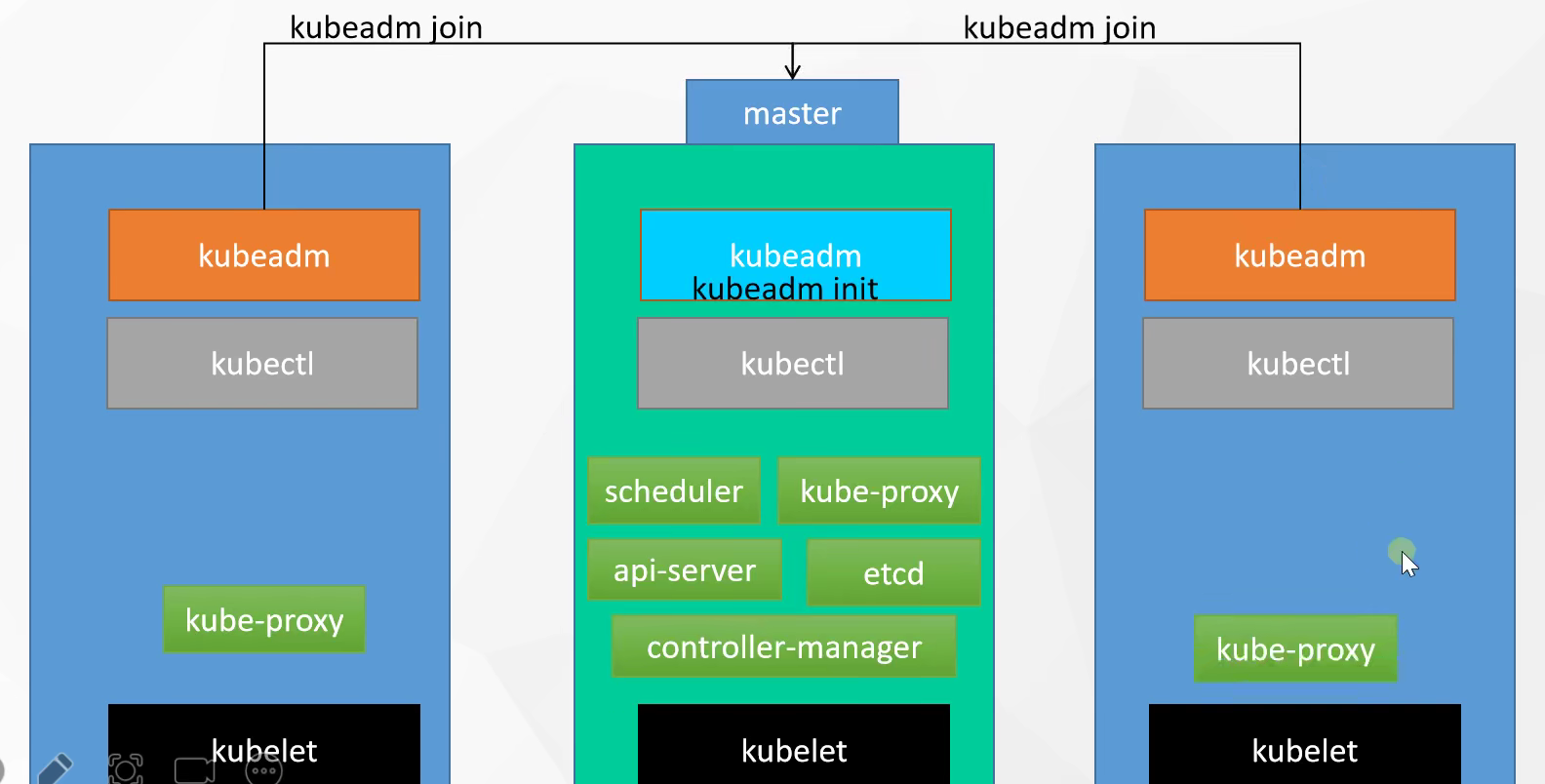

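Architecture

Components

A cluster consists of a set of node machines, with at least one worker node.

Control plane components

Control plane components make global decisions about the cluster.

All control plane components are usually started on the same machine.

kube-apiserver

Exposes the Kubernetes API and acts as the front end of the k8s control plane.

etcd

A consistent, highly available key-value store. It is the backing database for all cluster data.

kube-scheduler

Watches for newly created Pods that have no node assigned and selects a node for them to run on.

kube-controller-manager

Node controller: notices and responds when nodes fail.

Job controller: watches Job objects, which represent one-off tasks, and creates Pods to run those tasks to completion.

Endpoints controller: populates Endpoints objects, which join Services and Pods.

Service account and token controllers: create default accounts and API access tokens for new namespaces.

kubelet

Maintains the container lifecycle and also manages volumes and networking.

Container runtime

Manages images and actually runs the Pods and containers.

Node components

kubelet

An agent that runs on each node and makes sure containers are running in a Pod.

kube-proxy

A network proxy that runs on each node. It maintains network rules on the node that allow network sessions from inside or outside the cluster to communicate with Pods.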

插件

CoreDNS

- 可以为集群中的 SVC 创建一个域名 IP 的对应关系解析的 DNS 服务

Dashboard

- 给 K8s 集群提供了一个 B/S 架构的访问入口

Ingress Controller

- 官方只能够实现四层的网络代理,而 Ingress 可以实现七层的代理

Prometheus

- 给 K8s 集群提供资源监控的能力

Federation

- 提供一个可以跨集群中心多 K8s 的统一管理功能,提供跨可用区的集群

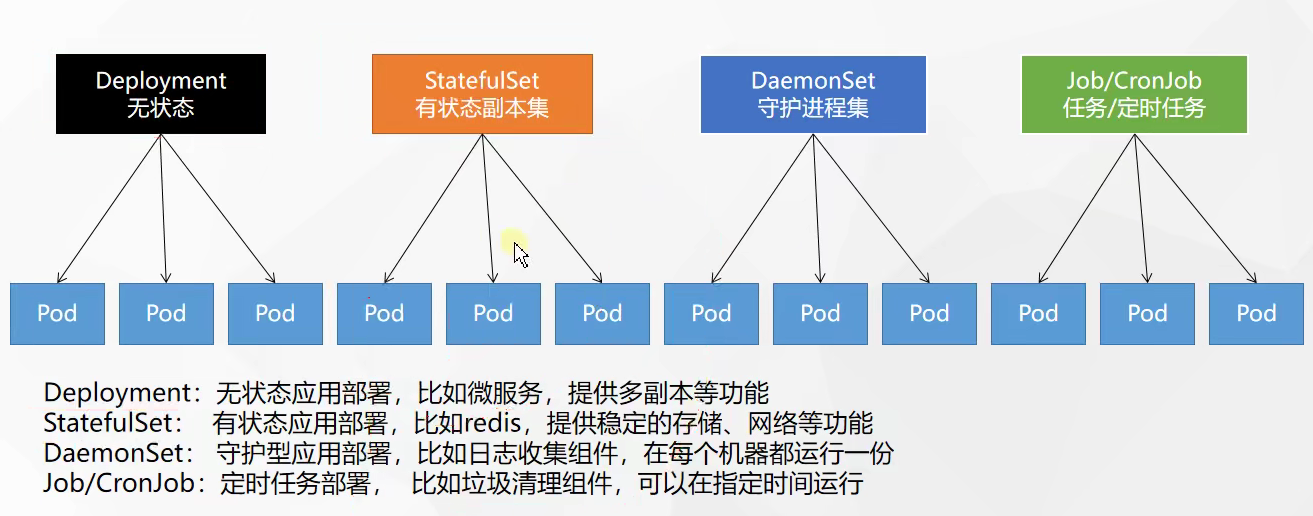

基本对象

- Pod -> 集群中的基本单元

- daemon sets:每个机器都放

- statefull sets:有状态应用

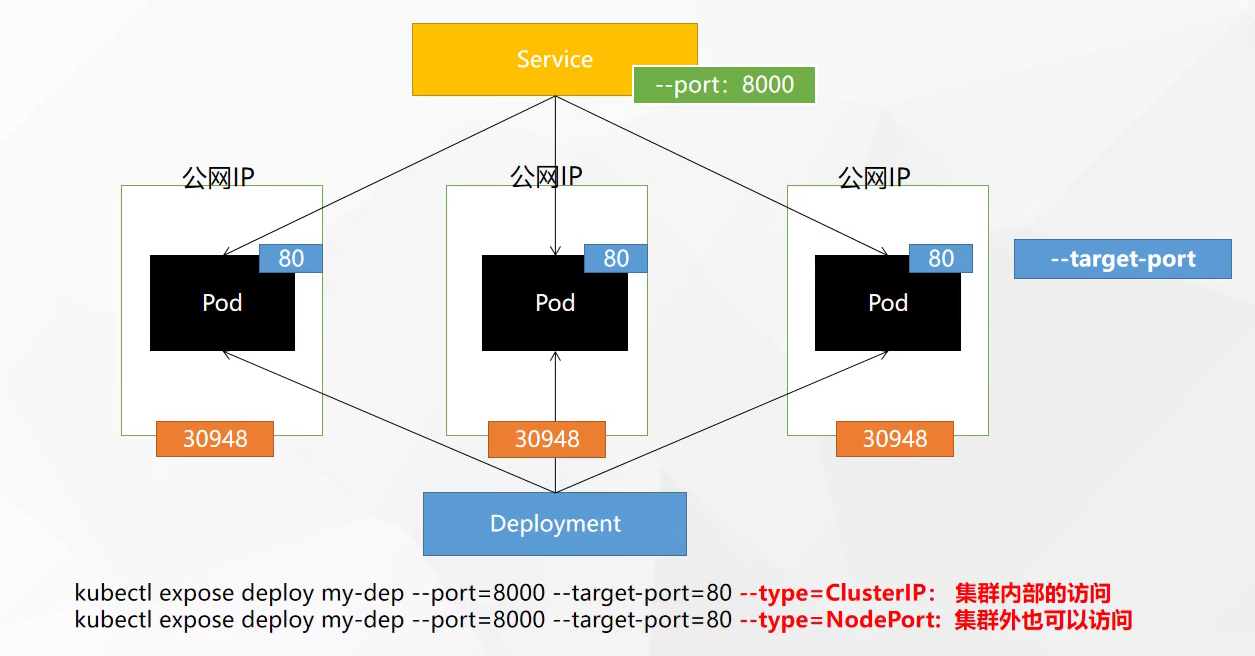

- Service -> 解决如何访问 Pod 里面服务的问题,统一应用访问入口

- Volume -> 集群中的存储卷

- Namespace -> 命名空间为集群提供虚拟的隔离作用

二、安装

virtualbox

https://www.virtualbox.org/wiki/Downloads

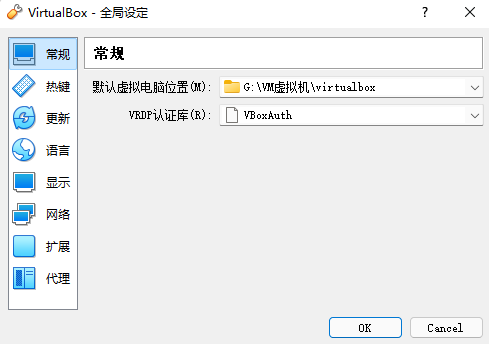

打开virtualbox软件,点击管理打开全局配置配置box的新路径

网络配置

DNS解析

vi /etc/sysconfig/network-scripts/ifcfg-eth0

DNS1="114.114.114.114"

service network restart虚拟机网卡

原来

nat地址转换,虚拟机和本地都能访问互联网,同一个IP,通过端口转发

仅主机网络,虚拟机内部共享网络,方便能够使用开发工具连接

NAT网络,k8s,局域网

如果使用网络地址转换,ip地址一样,出现问题

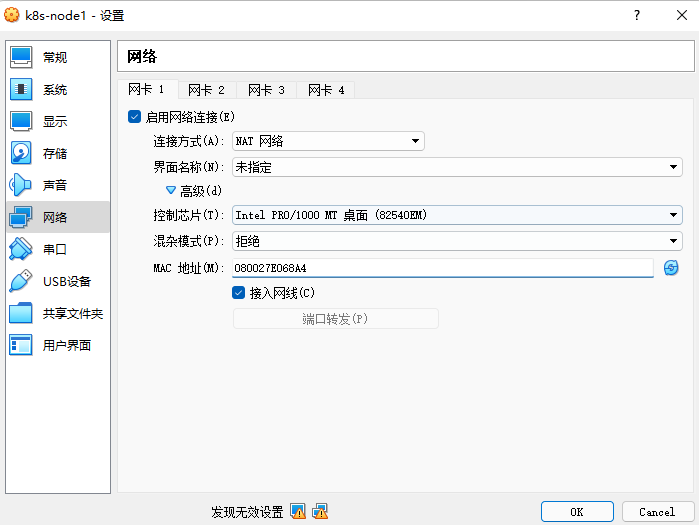

全局创建NAT网络

单个虚拟机网卡一改成NAT网络,重新生成MAC地址

文件位置

不能有中文!

选中3个无界面启动

10.0.2.6/24

10.0.2.15/24

10.0.2.5/24

ping 10.0.2.6 #试试看

ping baidu.com

Firewall, etc.

# Right-click and send the commands to all sessions: disable the firewall, SELinux and swap
systemctl stop firewalld
systemctl disable firewalld
sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0
cat /etc/selinux/config
swapoff -a # temporary: swap comes back after a reboot
sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent
free -g # verify: swap must be 0
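
The `free -g` check above can be scripted. The sketch below assumes the usual procps `free` layout, where the second column of the Swap row is the total. swap_total and swap_is_off are hypothetical helper names, not part of any tool.

```sh
#!/bin/sh
# Read `free` output on stdin and print the "total" column of the Swap row.
swap_total() {
  awk '/^Swap:/ { print $2 }'
}

# Succeed (exit status 0) only when the swap total reported by `free` is 0.
swap_is_off() {
  [ "$(free | swap_total)" = "0" ]
}

# Usage on a node: swap_is_off && echo "swap is off" || echo "swap is still on"
```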

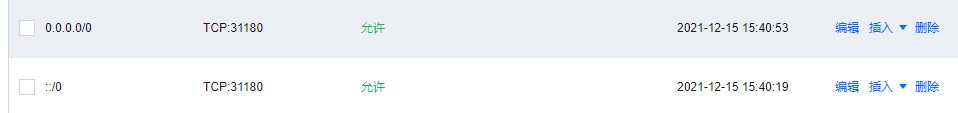

| Service | Protocol | Action | Start port | End port | Notes |
|---|---|---|---|---|---|
| ssh | TCP | allow | 22 | | |
| etcd | TCP | allow | 2379 | 2380 | |
| apiserver | TCP | allow | 6443 | | |
| calico | TCP | allow | 9099 | 9100 | |
| bgp | TCP | allow | 179 | | |
| nodeport | TCP | allow | 30000 | 32767 | |
| master | TCP | allow | 10250 | 10258 | |
| dns | TCP | allow | 53 | | |
| dns | UDP | allow | 53 | | |
| local-registry | TCP | allow | 5000 | | Required in offline environments |
| local-apt | TCP | allow | 5080 | | Required in offline environments |
| rpcbind | TCP | allow | 111 | | Required when using NFS |
| ipip | IPENCAP / IPIP | allow | | | Calico uses the IPIP protocol |
| metrics-server | TCP | allow | 8443 | | |
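
Instead of disabling firewalld entirely, you could open the ports in the table one by one. The sketch below is a dry run: it only prints firewall-cmd commands and changes nothing. The port list is an example subset of the table, and firewall_cmds is a hypothetical helper name.

```sh
#!/bin/sh
# Print (do not run) firewall-cmd commands that permanently open each
# "port[-port]/proto" argument, followed by a reload so the rules take effect.
firewall_cmds() {
  for spec in "$@"; do
    echo "firewall-cmd --permanent --add-port=$spec"
  done
  echo "firewall-cmd --reload"
}

# Example subset of the table: ssh, etcd, apiserver, master, nodeport, dns
firewall_cmds 22/tcp 2379-2380/tcp 6443/tcp 10250-10258/tcp 30000-32767/tcp 53/tcp 53/udp
```

Piping the output to sh would apply the commands. The ipip row has no port, so this helper does not cover it.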

Hostname-to-IP mapping [may change]

ip addr
vi /etc/hosts
# add:
10.0.2.6 k8s-node1
10.0.2.15 k8s-node2
10.0.2.5 k8s-node3
# To set a specific hostname if needed (newhostname is the new hostname)
hostnamectl set-hostname newhostname
cat /etc/hosts

Bridged traffic

# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
EOF
sysctl --system

Vagrant

Box mirror URLs

# Try (Windows): move VAGRANT_HOME, where Vagrant stores boxes, to another drive
setx VAGRANT_HOME "E:\vm\virtualbox" /M
# Download a single box
# centos
vagrant init centos7 https://mirrors.ustc.edu.cn/centos-cloud/centos/7/vagrant/x86_64/images/CentOS-7.box
# ubuntu
vagrant init ubuntu-bionic https://mirrors.tuna.tsinghua.edu.cn/ubuntu-cloud-images/bionic/current/bionic-server-cloudimg-amd64-vagrant.box
vagrant up

Vagrantfile

Remember to set the VMs' network to the NAT Network.

Disk expansion: vagrant plugin install vagrant-disksize

sudo fdisk -l
sudo fdisk /dev/sda
# Press p to print the partition table; by default there are sda1 and sda2.
# Press n to create a new partition.
# Press p to make it a primary partition.
# Enter 3 to make it the third partition.
# Press Enter twice to accept the default start and end positions.
# Press t to change the partition type.
# Enter 3 to select the third partition.
# Enter 8e to set the type to Linux LVM.
# Press w to write the changes.

Vagrant.configure("2") do |config|
  (1..3).each do |i|
    config.vm.define "k8s-node#{i}" do |node|
      # Box used by the VM
      node.vm.box = "centos/7"
      config.vm.box_url = "https://mirrors.ustc.edu.cn/centos-cloud/centos/7/vagrant/x86_64/images/CentOS-7.box"
      config.disksize.size = "100GB"
      # Hostname of the VM
      node.vm.hostname = "k8s-node#{i}"
      # IP of the VM
      node.vm.network "private_network", ip: "192.168.56.#{99+i}", netmask: "255.255.255.0"
      # Shared folder between the host and the VM
      # node.vm.synced_folder "~/Documents/vagrant/share", "/home/vagrant/share"
      # VirtualBox-specific settings
      node.vm.provider "virtualbox" do |v|
        # Name of the VM
        v.name = "k8s-node#{i}"
        # Memory size of the VM (MB)
        v.memory = 4096
        # Number of CPUs of the VM
        v.cpus = 4
      end
    end
  end
end

Commands

vagrant up
vagrant ssh k8s-node1
# Initial setup
su root
# password: vagrant
vi /etc/ssh/sshd_config
# change it to:
#PasswordAuthentication no
PasswordAuthentication yes
service sshd restart
sudo passwd root
exit
exit

You may also need to change the VM's network adapter.

IP: 192.168.56.100
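
The Vagrantfile gives node i the host-only address 192.168.56.#{99+i}, so k8s-node1 gets the 192.168.56.100 noted above. The sketch below reproduces that arithmetic, which helps when preparing /etc/hosts entries for the Vagrant setup. node_ip is a hypothetical helper name.

```sh
#!/bin/sh
# Same arithmetic as the Vagrantfile: node i gets 192.168.56.(99 + i).
node_ip() {
  echo "192.168.56.$((99 + $1))"
}

# Print "IP hostname" lines in /etc/hosts format for k8s-node1..3.
for i in 1 2 3; do
  echo "$(node_ip "$i") k8s-node$i"
done
```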

Docker

yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine
sudo yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2
sudo yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum list docker-ce.x86_64 --showduplicates | sort -r
yum install -y docker-ce-18.06.3.ce-3.el7 # install a specific version
# sudo yum install -y docker-ce docker-ce-cli containerd.io # without a pinned version this failed
sudo mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": ["https://kpw8rst3.mirror.aliyuncs.com"]
}
EOF
# Or the minimal variant (it overwrites the file written above)
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://kpw8rst3.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
systemctl enable docker
docker ps -a
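
Both daemon.json variants set native.cgroupdriver=systemd, the cgroup driver that kubeadm's preflight checks recommend. After restarting Docker you can read the driver back from `docker info`. The sketch assumes the "Cgroup Driver: ..." line that docker info prints, and cgroup_driver is a hypothetical helper name.

```sh
#!/bin/sh
# Read `docker info` output on stdin and print the value of "Cgroup Driver:".
# Leading spaces are allowed because newer Docker versions indent the line.
cgroup_driver() {
  sed -n 's/^ *Cgroup Driver: *//p'
}

# Usage on a node (should print "systemd" after the restart above):
#   docker info 2>/dev/null | cgroup_driver
```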

Base packages

- kubeadm: the command used to bootstrap the cluster.

- kubelet: runs on every node in the cluster and starts Pods, containers, etc.

- kubectl: the command-line tool for talking to the cluster.

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Check that the packages are available:
yum list | grep kube
# Install:
yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3
# Start on boot:
systemctl enable kubelet
systemctl start kubelet
# kubelet cannot run properly yet: it waits until the master is initialized and the node has joined
systemctl status kubelet

kubectl

# Optional: yum already installed kubectl-1.17.3 above. This downloads the latest stable kubectl instead;
# kubectl is only supported within one minor version of the API server.
curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
kubectl version --client

k8s-master [may change]

vim master_images.sh

#!/bin/bash
images=(
  kube-apiserver:v1.17.3
  kube-proxy:v1.17.3
  kube-controller-manager:v1.17.3
  kube-scheduler:v1.17.3
  coredns:1.6.5
  etcd:3.4.3-0
  pause:3.1
)
for imageName in "${images[@]}"; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  # docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
done

# The script pulls the images of all the control-plane components
chmod 700 master_images.sh
./master_images.sh
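
The docker tag line in master_images.sh is commented out because kubeadm init below is given --image-repository pointing at the same Aliyun mirror, so no retagging to k8s.gcr.io is needed. If kubeadm were run without that flag, the retag commands would look like the output of this dry-run sketch, which only prints them. retag_cmd is a hypothetical helper name.

```sh
#!/bin/sh
# Mirror prefix used by master_images.sh.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

# Print the docker tag command that renames one mirror image to k8s.gcr.io.
retag_cmd() {
  echo "docker tag $MIRROR/$1 k8s.gcr.io/$1"
}

for image in kube-apiserver:v1.17.3 kube-proxy:v1.17.3 \
             kube-controller-manager:v1.17.3 kube-scheduler:v1.17.3 \
             coredns:1.6.5 etcd:3.4.3-0 pause:3.1; do
  retag_cmd "$image"
done
```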

# Change --apiserver-advertise-address to this machine's own IP
kubeadm init \
  --apiserver-advertise-address=10.0.2.6 \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version v1.17.3 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.244.0.0/16
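
--pod-network-cidr=10.244.0.0/16 has to match the "Network" value in flannel's net-conf.json. Neither the pod CIDR nor --service-cidr should overlap the node network (10.0.2.0/24 here). The sketch below checks for overlap using POSIX sh arithmetic. It assumes each CIDR is written with its network address, as all the ones here are. ip_to_int and cidrs_overlap are hypothetical helper names.

```sh
#!/bin/sh
# Convert a dotted IPv4 address (a.b.c.d) to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( (($1 * 256 + $2) * 256 + $3) * 256 + $4 ))
}

# Succeed if the two CIDR ranges share any address.
# Assumes each CIDR is given as its network address, e.g. 10.244.0.0/16.
cidrs_overlap() {
  a_start=$(ip_to_int "${1%/*}")
  b_start=$(ip_to_int "${2%/*}")
  a_end=$(( a_start + (1 << (32 - ${1#*/})) - 1 ))
  b_end=$(( b_start + (1 << (32 - ${2#*/})) - 1 ))
  [ "$a_start" -le "$b_end" ] && [ "$b_start" -le "$a_end" ]
}

pod=10.244.0.0/16 svc=10.96.0.0/16 nodes=10.0.2.0/24
for pair in "$pod $svc" "$pod $nodes" "$svc $nodes"; do
  set -- $pair
  if cidrs_overlap "$1" "$2"; then echo "OVERLAP: $1 $2"; else echo "ok: $1 $2"; fi
done
```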

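# Error seen: Docker version mismatch
# Printed on the console after a successful init:
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# !!! Also printed on the console. The network is needed first, so install flannel (below) before joining
kubeadm join 10.0.2.4:6443 --token a0l0br.8ucwd8fc5uidq6xv \
    --discovery-token-ca-cert-hash sha256:fe39185433efc499546acc5dcd4e9a8cb9b95134170ff4a379e4d8888dd8a548
# If it fails:
# the node's clock may be wrong
# kubeadm reset  # reset, then try again
# rm -rf $HOME/.kube
# As a last resort:
# kubeadm join *** --ignore-preflight-errors=all

Token expired

A bootstrap token is valid for 24 hours by default. To print a fresh join command, run kubeadm token create --print-join-command on the master.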

Network plugin

## URL not reachable:
# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# Use the kube-flannel.yml from the resources instead. It contains the Pod security policy and the cluster config, and it runs on every node.
# If the flannel image cannot be pulled, substitute one from Docker Hub or search for flannel on Gitee
# quay.io/coreos/flannel:v0.11.0-arm64 -> jmgao1983/flannel:v0.11.0-amd64
kubectl apply -f kube-flannel.yml
# to remove it: kubectl delete -f kube-flannel.yml
kubectl get pods -A
# make sure kube-flannel-ds-amd64-hrrg6 is Running
NAMESPACE     NAME                                READY   STATUS    RESTARTS   AGE
kube-system   coredns-7f9c544f75-z68bl            0/1     Pending   0          4m55s
kube-system   coredns-7f9c544f75-z75jq            0/1     Pending   0          4m55s
kube-system   etcd-k8s-node1                      1/1     Running   0          4m51s
kube-system   kube-apiserver-k8s-node1            1/1     Running   0          4m51s
kube-system   kube-controller-manager-k8s-node1   1/1     Running   0          4m51s
kube-system   kube-flannel-ds-amd64-dw6ss         1/1     Running   0          16s
kube-system   kube-proxy-w2n27                    1/1     Running   0          4m55s
kube-system   kube-scheduler-k8s-node1            1/1     Running   0          4m51s
# The cluster nodes should be Ready
kubectl get nodes
kubectl get pods --all-namespaces
# make sure kube-flannel-ds-amd64-hrrg6 is Running
# Then copy the join command and run it on the [worker] servers
kubeadm join 10.0.2.6:6443 --token tvf99z.wsd4ocawda7clok3 \
    --discovery-token-ca-cert-hash sha256:9bf9e1948f7300652e87d530b0750b5b9520f87172e7658a2b9543a86f26c6a7
# If it fails, the clocks may be out of sync
ntpdate cn.pool.ntp.org
date

[root@k8s-node1 k8s]# kubectl get nodes
NAME        STATUS   ROLES    AGE     VERSION
k8s-node1   Ready    master   9m48s   v1.17.3
k8s-node2   Ready    <none>   2m9s    v1.17.3
k8s-node3   Ready    <none>   2m9s    v1.17.3

If a node stays NotReady, make sure the network plugin is configured on every node.
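
You can filter the `kubectl get nodes` output to find nodes that are still NotReady without reading the whole table. The sketch assumes the default column layout shown above, with NAME first and STATUS second. not_ready_nodes is a hypothetical helper name.

```sh
#!/bin/sh
# Read `kubectl get nodes` output on stdin (header line included) and print
# the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on the master: kubectl get nodes | not_ready_nodes
```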

# Pods in the kube-system namespace
kubectl get pod -n kube-system -o wide
# wait until they are all Running
kube-flannel-ds-amd64-6g8ln   1/1   Running   0   88s   10.0.2.5    k8s-node3   <none>   <none>
kube-flannel-ds-amd64-jjvxc   1/1   Running   0   31m   10.0.2.6    k8s-node1   <none>   <none>
kube-flannel-ds-amd64-kzxcb   1/1   Running   0   91s   10.0.2.15   k8s-node2   <none>   <none>

flannel.yaml

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN', 'NET_RAW']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unused in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

hostNetwork: true

priorityClassName: system-node-critical

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni-plugin

image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.0

command:

- cp

args:

- -f

- /flannel

- /opt/cni/bin/flannel

volumeMounts:

- name: cni-plugin

mountPath: /opt/cni/bin

- name: install-cni

image: quay.io/coreos/flannel:v0.15.1

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.15.1

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN", "NET_RAW"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni-plugin

hostPath:

path: /opt/cni/bin

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

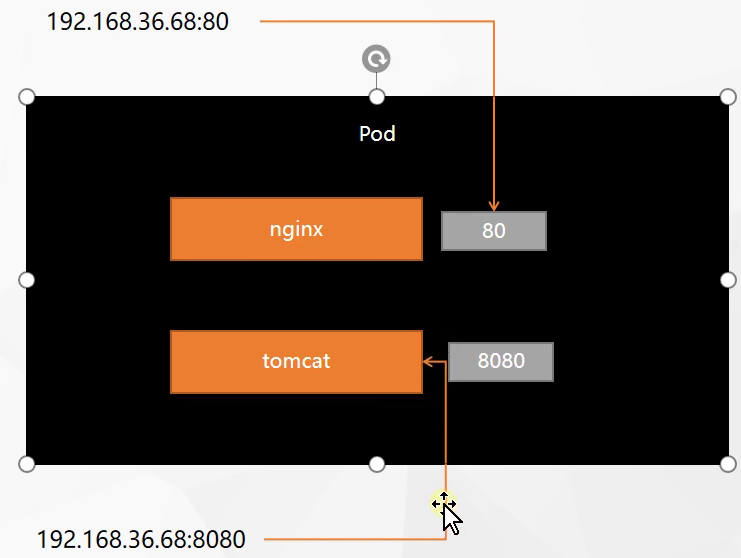

name: kube-flannel-cfgIngress

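Ingress controls access to Services and exposes ports to the outside through one unified entry point. Here it is implemented with nginx.

DaemonSet: every server runs an ingress controller.

yaml

kubectl apply -f ingress-controller.yaml

The file defines the namespace, the account information, the RBAC rules and a DaemonSet (so every server gets a controller):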

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - "extensions"
  resources:
  - ingresses/status
  verbs:
  - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  resourceNames:
  # Defaults to "<election-id>-<ingress-class>"
  # Here: "<ingress-controller-leader>-<nginx>"
  # This has to be adapted if you change either parameter
  # when launching the nginx-ingress-controller.
  - "ingress-controller-leader-nginx"
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - endpoints
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
      - name: nginx-ingress-controller
        image: siriuszg/nginx-ingress-controller:0.20.0
        args:
        - /nginx-ingress-controller
        - --configmap=$(POD_NAMESPACE)/nginx-configuration
        - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
        - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
        - --publish-service=$(POD_NAMESPACE)/ingress-nginx
        - --annotations-prefix=nginx.ingress.kubernetes.io
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
          # www-data -> 33
          runAsUser: 33
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - name: http
          containerPort: 80
        - name: https
          containerPort: 443
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
---
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
spec:
  #type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
  - name: https
    port: 443
    targetPort: 443
    protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

kubectl apply -f ingress-tomcat6.yaml

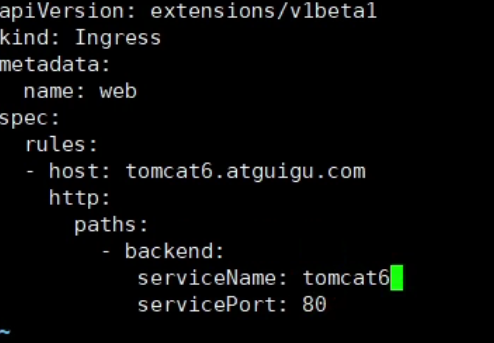

Using it with Tomcat

Routing by domain name

ingress-tomcat6.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web
spec:
  rules:
  - host: tomcat6.atguigu.com
    http:
      paths:
      - backend:
          serviceName: tomcat6
          servicePort: 80

ingress.yaml (a second example that routes by path)

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: web-ingress
  namespace: mingyue
spec:
  rules:
  - host: mingyuetest.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: psedu-admin-front
          servicePort: 80 # port of the Service
      - path: /prod-api
        backend:
          serviceName: psedu-gateway
          servicePort: 8080

kubectl apply -f ingress.yaml

kubectl get ingress

minikube

# as root
curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64
install minikube-linux-amd64 /usr/local/bin/minikube
# error when run as root: use a non-root user in the docker group instead
useradd tomx
passwd tomx # set a password
usermod -aG docker tomx && newgrp docker
su tomx # switch to that user
# Or, without the docker driver:
yum install epel-release
yum install conntrack-tools
minikube start --image-mirror-country=cn --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --vm-driver=none
# downloads kubectl on first use
minikube kubectl -- get po -A

Dashboard

Default manifest:

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque
---
# ------------------- Dashboard Service Account ------------------- #
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
---
# ------------------- Dashboard Role & Role Binding ------------------- #
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system
---
# ------------------- Dashboard Deployment ------------------- #
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
---
# ------------------- Dashboard Service ------------------- #
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard

Expose it at NodeIP:30001. This requires type: NodePort on the Service and nodePort: 30001 on its port; the default manifest above sets neither.

A token is needed to log in, and it is retrieved with a command.
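
On Kubernetes 1.17 the kubernetes-dashboard ServiceAccount gets an auto-generated token Secret in kube-system named kubernetes-dashboard-token-<random suffix>. The sketch below picks that Secret's name out of `kubectl get secret` output. dashboard_token_secret is a hypothetical helper name. The manifest above binds this account only to the minimal Role, so its token may not be allowed to view much.

```sh
#!/bin/sh
# Read `kubectl -n kube-system get secret` output on stdin and print the
# name(s) of the dashboard ServiceAccount's token Secret.
dashboard_token_secret() {
  awk '$1 ~ /^kubernetes-dashboard-token-/ { print $1 }'
}

# Usage on the master; the token appears in the "token:" field:
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | dashboard_token_secret)"
```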

StorageClass

(This apparently did not work.)

# A default StorageClass is required
kubectl get sc
# Docs: https://v2-1.docs.kubesphere.io/docs/zh-CN/appendix/install-openebs/
kubectl get node -o wide
kubectl describe node k8s-node1 | grep Taint
# Taints: node-role.kubernetes.io/master:NoSchedule
# The taint has to be removed first
kubectl taint nodes k8s-node1 node-role.kubernetes.io/master:NoSchedule-
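
The `grep Taint` step can be turned into a yes/no check, for example to confirm the taint is gone before installing OpenEBS. The sketch matches the literal taint string shown above. has_master_taint is a hypothetical helper name.

```sh
#!/bin/sh
# Read `kubectl describe node` output on stdin; succeed if the master
# NoSchedule taint is present (fixed-string match, no regex).
has_master_taint() {
  grep -qF 'node-role.kubernetes.io/master:NoSchedule'
}

# Usage: kubectl describe node k8s-node1 | has_master_taint && echo "still tainted"
```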

# openebs
kubectl create ns openebs
# This URL no longer works; use the local file below instead
kubectl apply -f https://openebs.github.io/charts/openebs-operator-1.5.0.yaml
kubectl apply -f openebs.yaml
# See https://blog.csdn.net/RookiexiaoMu_a/article/details/119859930
# Wait until everything is running
kubectl get pod --all-namespaces
kubectl get sc
# Make openebs-hostpath the default StorageClass
kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
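
After the patch, `kubectl get sc` marks the default class by printing "(default)" after its name. The sketch below extracts that class, assuming this column layout. default_storage_class is a hypothetical helper name.

```sh
#!/bin/sh
# Read `kubectl get sc` output on stdin and print the name of the class
# marked "(default)"; prints nothing if no default is set.
default_storage_class() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Usage: kubectl get sc | default_storage_class   # should print openebs-hostpath
```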

# Check the OpenEBS Pods; if they are all Running, the storage is installed
kubectl get pod -n openebs
# Restore the taint
kubectl taint nodes k8s-node1 node-role.kubernetes.io/master=:NoSchedule

openebs-operator

#

# DEPRECATION NOTICE

# This operator file is deprecated in 2.11.0 in favour of individual operators

# for each storage engine and the file will be removed in version 3.0.0

#

# Further specific components can be deploy using there individual operator yamls

#

# To deploy cStor:

# https://github.com/openebs/charts/blob/gh-pages/cstor-operator.yaml

#

# To deploy Jiva:

# https://github.com/openebs/charts/blob/gh-pages/jiva-operator.yaml

#

# To deploy Dynamic hostpath localpv provisioner:

# https://github.com/openebs/charts/blob/gh-pages/hostpath-operator.yaml

#

#

# This manifest deploys the OpenEBS control plane components, with associated CRs & RBAC rules

# NOTE: On GKE, deploy the openebs-operator.yaml in admin context

# Create the OpenEBS namespace

apiVersion: v1

kind: Namespace

metadata:

name: openebs

---

# Create Maya Service Account

apiVersion: v1

kind: ServiceAccount

metadata:

name: openebs-maya-operator

namespace: openebs

---

# Define Role that allows operations on K8s pods/deployments

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: openebs-maya-operator

rules:

- apiGroups: ["*"]

resources: ["nodes", "nodes/proxy"]

verbs: ["*"]

- apiGroups: ["*"]

resources: ["namespaces", "services", "pods", "pods/exec", "deployments", "deployments/finalizers", "replicationcontrollers", "replicasets", "events", "endpoints", "configmaps", "secrets", "jobs", "cronjobs"]

verbs: ["*"]

- apiGroups: ["*"]

resources: ["statefulsets", "daemonsets"]

verbs: ["*"]

- apiGroups: ["*"]

resources: ["resourcequotas", "limitranges"]

verbs: ["list", "watch"]

- apiGroups: ["*"]

resources: ["ingresses", "horizontalpodautoscalers", "verticalpodautoscalers", "certificatesigningrequests"]

verbs: ["list", "watch"]

- apiGroups: ["*"]

resources: ["storageclasses", "persistentvolumeclaims", "persistentvolumes"]

verbs: ["*"]

- apiGroups: ["volumesnapshot.external-storage.k8s.io"]

resources: ["volumesnapshots", "volumesnapshotdatas"]

verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]

- apiGroups: ["apiextensions.k8s.io"]

resources: ["customresourcedefinitions"]

verbs: [ "get", "list", "create", "update", "delete", "patch"]

- apiGroups: ["openebs.io"]

resources: [ "*"]

verbs: ["*" ]

- apiGroups: ["cstor.openebs.io"]

resources: [ "*"]

verbs: ["*" ]

- apiGroups: ["coordination.k8s.io"]

resources: ["leases"]

verbs: ["get", "watch", "list", "delete", "update", "create"]

- apiGroups: ["admissionregistration.k8s.io"]

resources: ["validatingwebhookconfigurations", "mutatingwebhookconfigurations"]

verbs: ["get", "create", "list", "delete", "update", "patch"]

- nonResourceURLs: ["/metrics"]

verbs: ["get"]

- apiGroups: ["*"]

resources: ["poddisruptionbudgets"]

verbs: ["get", "list", "create", "delete", "watch"]

---

# Bind the Service Account with the Role Privileges.

# TODO: Check if default account also needs to be there

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: openebs-maya-operator

subjects:

- kind: ServiceAccount

name: openebs-maya-operator

namespace: openebs

roleRef:

kind: ClusterRole

name: openebs-maya-operator

apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: maya-apiserver

namespace: openebs

labels:

name: maya-apiserver

openebs.io/component-name: maya-apiserver

openebs.io/version: 2.12.0

spec:

selector:

matchLabels:

name: maya-apiserver

openebs.io/component-name: maya-apiserver

replicas: 1

strategy:

type: Recreate

rollingUpdate: null

template:

metadata:

labels:

name: maya-apiserver

openebs.io/component-name: maya-apiserver

openebs.io/version: 2.12.0

spec:

serviceAccountName: openebs-maya-operator

containers:

- name: maya-apiserver

imagePullPolicy: IfNotPresent

image: openebs/m-apiserver:2.12.0

ports:

- containerPort: 5656

env:

# OPENEBS_IO_KUBE_CONFIG enables maya api service to connect to K8s

# based on this config. This is ignored if empty.

# This is supported for maya api server version 0.5.2 onwards

#- name: OPENEBS_IO_KUBE_CONFIG

# value: "/home/ubuntu/.kube/config"

# OPENEBS_IO_K8S_MASTER enables maya api service to connect to K8s

# based on this address. This is ignored if empty.

# This is supported for maya api server version 0.5.2 onwards

#- name: OPENEBS_IO_K8S_MASTER

# value: "http://172.28.128.3:8080"

# OPENEBS_NAMESPACE provides the namespace of this deployment as an

# environment variable

- name: OPENEBS_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

# OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as

# environment variable

- name: OPENEBS_SERVICE_ACCOUNT

valueFrom:

fieldRef:

fieldPath: spec.serviceAccountName

# OPENEBS_MAYA_POD_NAME provides the name of this pod as

        # environment variable
        - name: OPENEBS_MAYA_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # If OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG is false then OpenEBS default
        # storageclass and storagepool will not be created.
        - name: OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
          value: "true"
        # OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL decides whether default cstor sparse pool should be
        # configured as a part of openebs installation.
        # If "true" a default cstor sparse pool will be configured, if "false" it will not be configured.
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        - name: OPENEBS_IO_INSTALL_DEFAULT_CSTOR_SPARSE_POOL
          value: "false"
        # OPENEBS_IO_INSTALL_CRD environment variable is used to enable/disable CRD installation
        # from Maya API server. By default the CRDs will be installed
        # - name: OPENEBS_IO_INSTALL_CRD
        #   value: "true"
        # OPENEBS_IO_BASE_DIR is used to configure base directory for openebs on host path.
        # Where OpenEBS can store required files. Default base path will be /var/openebs
        # - name: OPENEBS_IO_BASE_DIR
        #   value: "/var/openebs"
        # OPENEBS_IO_CSTOR_TARGET_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor volume pod.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_TARGET_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_CSTOR_POOL_SPARSE_DIR can be used to specify the hostpath
        # to be used for saving the shared content between the side cars
        # of cstor pool pod. This ENV is also used to indicate the location
        # of the sparse devices.
        # The default path used is /var/openebs/sparse
        #- name: OPENEBS_IO_CSTOR_POOL_SPARSE_DIR
        #  value: "/var/openebs/sparse"
        # OPENEBS_IO_JIVA_POOL_DIR can be used to specify the hostpath
        # to be used for default Jiva StoragePool loaded by OpenEBS
        # The default path used is /var/openebs
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_JIVA_POOL_DIR
        #  value: "/var/openebs"
        # OPENEBS_IO_LOCALPV_HOSTPATH_DIR can be used to specify the hostpath
        # to be used for default openebs-hostpath storageclass loaded by OpenEBS
        # The default path used is /var/openebs/local
        # This value takes effect only if OPENEBS_IO_CREATE_DEFAULT_STORAGE_CONFIG
        # is set to true
        #- name: OPENEBS_IO_LOCALPV_HOSTPATH_DIR
        #  value: "/var/openebs/local"
        - name: OPENEBS_IO_JIVA_CONTROLLER_IMAGE
          value: "openebs/jiva:2.12.1"
        - name: OPENEBS_IO_JIVA_REPLICA_IMAGE
          value: "openebs/jiva:2.12.1"
        - name: OPENEBS_IO_JIVA_REPLICA_COUNT
          value: "3"
        - name: OPENEBS_IO_CSTOR_TARGET_IMAGE
          value: "openebs/cstor-istgt:2.12.0"
        - name: OPENEBS_IO_CSTOR_POOL_IMAGE
          value: "openebs/cstor-pool:2.12.0"
        - name: OPENEBS_IO_CSTOR_POOL_MGMT_IMAGE
          value: "openebs/cstor-pool-mgmt:2.12.0"
        - name: OPENEBS_IO_CSTOR_VOLUME_MGMT_IMAGE
          value: "openebs/cstor-volume-mgmt:2.12.0"
        - name: OPENEBS_IO_VOLUME_MONITOR_IMAGE
          value: "openebs/m-exporter:2.12.0"
        - name: OPENEBS_IO_CSTOR_POOL_EXPORTER_IMAGE
          value: "openebs/m-exporter:2.12.0"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "openebs/linux-utils:2.12.0"
        # OPENEBS_IO_ENABLE_ANALYTICS if set to true sends anonymous usage
        # events to Google Analytics
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        # OPENEBS_IO_ANALYTICS_PING_INTERVAL can be used to specify the duration (in hours)
        # for periodic ping events sent to Google Analytics.
        # Default is 24h.
        # Minimum is 1h. You can convert this to weekly by setting 168h
        #- name: OPENEBS_IO_ANALYTICS_PING_INTERVAL
        #  value: "24h"
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - /usr/local/bin/mayactl
            - version
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: v1
kind: Service
metadata:
  name: maya-apiserver-service
  namespace: openebs
  labels:
    openebs.io/component-name: maya-apiserver-svc
spec:
  ports:
  - name: api
    port: 5656
    protocol: TCP
    targetPort: 5656
  selector:
    name: maya-apiserver
  sessionAffinity: None

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-provisioner
  namespace: openebs
  labels:
    name: openebs-provisioner
    openebs.io/component-name: openebs-provisioner
    openebs.io/version: 2.12.0
spec:
  selector:
    matchLabels:
      name: openebs-provisioner
      openebs.io/component-name: openebs-provisioner
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  template:
    metadata:
      labels:
        name: openebs-provisioner
        openebs.io/component-name: openebs-provisioner
        openebs.io/version: 2.12.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner
        imagePullPolicy: IfNotPresent
        image: openebs/openebs-k8s-provisioner:2.12.0
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that provisioner should forward the volume create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        # LEADER_ELECTION_ENABLED is used to enable/disable leader election. By default
        # leader election is enabled.
        #- name: LEADER_ELECTION_ENABLED
        #  value: "true"
        # OPENEBS_IO_JIVA_PATCH_NODE_AFFINITY is used to enable/disable setting node affinity
        # to the jiva replica deployments. Default is `enabled`. The valid values are
        # `enabled` and `disabled`.
        #- name: OPENEBS_IO_JIVA_PATCH_NODE_AFFINITY
        #  value: "enabled"
        # Process name used for matching is limited to the 15 characters
        # present in the pgrep output.
        # So fullname can't be used here with pgrep (>15 chars). A regular expression
        # that matches the entire command name has to be specified.
        # Anchor `^` : matches any string that starts with `openebs-provisi`
        # `.*`: matches any string that has `openebs-provisi` followed by zero or more char
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - test `pgrep -c "^openebs-provisi.*"` = 1
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-snapshot-operator
  namespace: openebs
  labels:
    name: openebs-snapshot-operator
    openebs.io/component-name: openebs-snapshot-operator
    openebs.io/version: 2.12.0
spec:
  selector:
    matchLabels:
      name: openebs-snapshot-operator
      openebs.io/component-name: openebs-snapshot-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-snapshot-operator
        openebs.io/component-name: openebs-snapshot-operator
        openebs.io/version: 2.12.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: snapshot-controller
        image: openebs/snapshot-controller:2.12.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # Process name used for matching is limited to the 15 characters
        # present in the pgrep output.
        # So fullname can't be used here with pgrep (>15 chars). A regular expression
        # that matches the entire command name has to be specified.
        # Anchor `^` : matches any string that starts with `snapshot-contro`
        # `.*`: matches any string that has `snapshot-contro` followed by zero or more char
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - test `pgrep -c "^snapshot-contro.*"` = 1
          initialDelaySeconds: 30
          periodSeconds: 60
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot controller should forward the snapshot create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
      - name: snapshot-provisioner
        image: openebs/snapshot-provisioner:2.12.0
        imagePullPolicy: IfNotPresent
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_MAYA_SERVICE_NAME provides the maya-apiserver K8s service name,
        # that snapshot provisioner should forward the clone create/delete requests.
        # If not present, "maya-apiserver-service" will be used for lookup.
        # This is supported for openebs provisioner version 0.5.3-RC1 onwards
        #- name: OPENEBS_MAYA_SERVICE_NAME
        #  value: "maya-apiserver-apiservice"
        # LEADER_ELECTION_ENABLED is used to enable/disable leader election. By default
        # leader election is enabled.
        #- name: LEADER_ELECTION_ENABLED
        #  value: "true"
        # Process name used for matching is limited to the 15 characters
        # present in the pgrep output.
        # So fullname can't be used here with pgrep (>15 chars). A regular expression
        # that matches the entire command name has to be specified.
        # Anchor `^` : matches any string that starts with `snapshot-provis`
        # `.*`: matches any string that has `snapshot-provis` followed by zero or more char
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - test `pgrep -c "^snapshot-provis.*"` = 1
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  annotations:
    controller-gen.kubebuilder.io/version: v0.5.0
  creationTimestamp: null
  name: blockdevices.openebs.io
spec:
  group: openebs.io
  names:
    kind: BlockDevice
    listKind: BlockDeviceList
    plural: blockdevices
    shortNames:
    - bd
    singular: blockdevice
  scope: Namespaced
  versions:
  - additionalPrinterColumns:
    - jsonPath: .spec.nodeAttributes.nodeName
      name: NodeName
      type: string
    - jsonPath: .spec.path
      name: Path
      priority: 1
      type: string
    - jsonPath: .spec.filesystem.fsType
      name: FSType
      priority: 1
      type: string
    - jsonPath: .spec.capacity.storage
      name: Size
      type: string
    - jsonPath: .status.claimState
      name: ClaimState
      type: string
    - jsonPath: .status.state
      name: Status
      type: string
    - jsonPath: .metadata.creationTimestamp
      name: Age
      type: date
    name: v1alpha1
    schema:
      openAPIV3Schema:
        description: BlockDevice is the Schema for the blockdevices API
        properties:
          apiVersion:
            description: 'APIVersion defines the versioned schema of this representation of an object. Servers should convert recognized schemas to the latest internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources'
            type: string
          kind:
            description: 'Kind is a string value representing the REST resource this object represents. Servers may infer this from the endpoint the client submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds'
            type: string
          metadata:
            type: object
          spec:
            description: DeviceSpec defines the properties and runtime status of a BlockDevice
            properties:
              aggregateDevice:
                description: AggregateDevice was intended to store the hierarchical information in cases of LVM. However this is currently not implemented and may need to be re-looked into for better design. To be deprecated
                type: string
              capacity:
                description: Capacity
                properties:
                  logicalSectorSize:
                    description: LogicalSectorSize is blockdevice logical-sector size in bytes
                    format: int32
                    type: integer
                  physicalSectorSize:
                    description: PhysicalSectorSize is blockdevice physical-Sector size in bytes
                    format: int32
                    type: integer
                  storage:
                    description: Storage is the blockdevice capacity in bytes
                    format: int64
                    type: integer
                required:
                - storage
                type: object
              claimRef:
                description: ClaimRef is the reference to the BDC which has claimed this BD
                properties:
                  apiVersion:
                    description: API version of the referent.
                    type: string
                  fieldPath:
                    description: 'If referring to a piece of an object instead of an entire object, this string should contain a valid JSON/Go field access statement, such as desiredState.manifest.containers[2]. For example, if the object reference is to a container within a pod, this would take on a value like: "spec.containers{name}" (where "name" refers to the name of the container that triggered the event) or if no container name is specified "spec.containers[2]" (container with index 2 in this pod). This syntax is chosen only to have some well-defined way of referencing a part of an object. TODO: this design is not final and this field is subject to change in the future.'
                    type: string
                  kind:
                    description: 'Kind of the referent. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds'
                    type: string
                  name:
                    description: 'Name of the referent. More info: https://kubernetes.io/docs/concepts/overview/working-with-objects/names/#names'
                    type: string
                  namespace:
                    description: 'Namespace of the referent. More info: https://kubernetes.io/docs/concepts/overview/working-with-objects/namespaces/'
                    type: string
                  resourceVersion:
                    description: 'Specific resourceVersion to which this reference is made, if any. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#concurrency-control-and-consistency'
                    type: string
                  uid:
                    description: 'UID of the referent. More info: https://kubernetes.io/docs/concepts/overview/working-with-objects/names/#uids'
                    type: string
                type: object
              details:
                description: Details contain static attributes of BD like model,serial, and so forth
                properties:
                  compliance:
                    description: Compliance is standards/specifications version implemented by device firmware such as SPC-1, SPC-2, etc
                    type: string
                  deviceType:
                    description: DeviceType represents the type of device like sparse, disk, partition, lvm, crypt
                    enum:
                    - disk
                    - partition
                    - sparse
                    - loop
                    - lvm
                    - crypt
                    - dm
                    - mpath
                    type: string
                  driveType:
                    description: DriveType is the type of backing drive, HDD/SSD
                    enum:
                    - HDD
                    - SSD
                    - Unknown
                    - ""
                    type: string
                  firmwareRevision:
                    description: FirmwareRevision is the disk firmware revision
                    type: string
                  hardwareSectorSize:
                    description: HardwareSectorSize is the hardware sector size in bytes
                    format: int32
                    type: integer
                  logicalBlockSize:
                    description: LogicalBlockSize is the logical block size in bytes reported by /sys/class/block/sda/queue/logical_block_size
                    format: int32
                    type: integer
                  model:
                    description: Model is model of disk
                    type: string
                  physicalBlockSize:
                    description: PhysicalBlockSize is the physical block size in bytes reported by /sys/class/block/sda/queue/physical_block_size
                    format: int32
                    type: integer
                  serial:
                    description: Serial is serial number of disk
                    type: string
                  vendor:
                    description: Vendor is vendor of disk
                    type: string
                type: object
              devlinks:
                description: DevLinks contains soft links of a block device like /dev/by-id/... /dev/by-uuid/...
                items:
                  description: DeviceDevLink holds the mapping between type and links like by-id type or by-path type link
                  properties:
                    kind:
                      description: Kind is the type of link like by-id or by-path.
                      enum:
                      - by-id
                      - by-path
                      type: string
                    links:
                      description: Links are the soft links
                      items:
                        type: string
                      type: array
                  type: object
                type: array
              filesystem:
                description: FileSystem contains mountpoint and filesystem type
                properties:
                  fsType:
                    description: Type represents the FileSystem type of the block device
                    type: string
                  mountPoint:
                    description: MountPoint represents the mountpoint of the block device.
                    type: string
                type: object
              nodeAttributes:
                description: NodeAttributes has the details of the node on which BD is attached
                properties:
                  nodeName:
                    description: NodeName is the name of the Kubernetes node resource on which the device is attached
                    type: string
                type: object
              parentDevice:
                description: "ParentDevice was intended to store the UUID of the parent Block Device as is the case for partitioned block devices. \n For example: /dev/sda is the parent for /dev/sda1 To be deprecated"
                type: string
              partitioned:
                description: Partitioned represents if BlockDevice has partitions or not (Yes/No) Currently always default to No. To be deprecated
                enum:
                - "Yes"
                - "No"
                type: string
              path:
                description: Path contain devpath (e.g. /dev/sdb)
                type: string
            required:
            - capacity
            - devlinks
            - nodeAttributes
            - path
            type: object
          status:
            description: DeviceStatus defines the observed state of BlockDevice
            properties:
              claimState:
                description: ClaimState represents the claim state of the block device
                enum:
                - Claimed
                - Unclaimed
                - Released
                type: string
              state:
                description: State is the current state of the blockdevice (Active/Inactive/Unknown)
                enum:
                - Active
                - Inactive
                - Unknown
                type: string
            required:
            - claimState
            - state
            type: object
        type: object
    served: true
    storage: true
    subresources: {}
status:
  acceptedNames:
    kind: ""
    plural: ""
  conditions: []
  storedVersions: []

---

apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  annotations:
    controller-gen.kubebuilder.io/version: v0.5.0
  creationTimestamp: null
  name: blockdeviceclaims.openebs.io
spec:
  group: openebs.io
  names:
    kind: BlockDeviceClaim
    listKind: BlockDeviceClaimList
    plural: blockdeviceclaims
    shortNames:
    - bdc
    singular: blockdeviceclaim
  scope: Namespaced
  versions:
  - additionalPrinterColumns:
    - jsonPath: .spec.blockDeviceName
      name: BlockDeviceName
      type: string
    - jsonPath: .status.phase
      name: Phase
      type: string
    - jsonPath: .metadata.creationTimestamp
      name: Age
      type: date
    name: v1alpha1
    schema:
      openAPIV3Schema:
        description: BlockDeviceClaim is the Schema for the blockdeviceclaims API
        properties:
          apiVersion:
            description: 'APIVersion defines the versioned schema of this representation of an object. Servers should convert recognized schemas to the latest internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources'
            type: string
          kind:
            description: 'Kind is a string value representing the REST resource this object represents. Servers may infer this from the endpoint the client submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds'
            type: string
          metadata:
            type: object
          spec:
            description: DeviceClaimSpec defines the request details for a BlockDevice
            properties:
              blockDeviceName:
                description: BlockDeviceName is the reference to the block-device backing this claim
                type: string
              blockDeviceNodeAttributes:
                description: BlockDeviceNodeAttributes is the attributes on the node from which a BD should be selected for this claim. It can include nodename, failure domain etc.
                properties:
                  hostName:
                    description: HostName represents the hostname of the Kubernetes node resource where the BD should be present
                    type: string
                  nodeName:
                    description: NodeName represents the name of the Kubernetes node resource where the BD should be present
                    type: string
                type: object
              deviceClaimDetails:
                description: Details of the device to be claimed
                properties:
                  allowPartition:
                    description: AllowPartition represents whether to claim a full block device or a device that is a partition
                    type: boolean
                  blockVolumeMode:
                    description: 'BlockVolumeMode represents whether to claim a device in Block mode or Filesystem mode. These are use cases of BlockVolumeMode: 1) Not specified: VolumeMode check will not be effective 2) VolumeModeBlock: BD should not have any filesystem or mountpoint 3) VolumeModeFileSystem: BD should have a filesystem and mountpoint. If DeviceFormat is specified then the format should match with the FSType in BD'
                    type: string
                  formatType:
                    description: Format of the device required, eg:ext4, xfs
                    type: string
                type: object
              deviceType:
                description: DeviceType represents the type of drive like SSD, HDD etc.,
                nullable: true
                type: string
              hostName:
                description: Node name from where blockdevice has to be claimed. To be deprecated. Use NodeAttributes.HostName instead
                type: string
              resources:
                description: Resources will help with placing claims on Capacity, IOPS
                properties:
                  requests:
                    additionalProperties:
                      anyOf:
                      - type: integer
                      - type: string
                      pattern: ^(\+|-)?(([0-9]+(\.[0-9]*)?)|(\.[0-9]+))(([KMGTPE]i)|[numkMGTPE]|([eE](\+|-)?(([0-9]+(\.[0-9]*)?)|(\.[0-9]+))))?$
                      x-kubernetes-int-or-string: true
                    description: 'Requests describes the minimum resources required. eg: if storage resource of 10G is requested minimum capacity of 10G should be available TODO for validating'
                    type: object
                required:
                - requests
                type: object
              selector:
                description: Selector is used to find block devices to be considered for claiming
                properties:
                  matchExpressions:
                    description: matchExpressions is a list of label selector requirements. The requirements are ANDed.
                    items:
                      description: A label selector requirement is a selector that contains values, a key, and an operator that relates the key and values.
                      properties:
                        key:
                          description: key is the label key that the selector applies to.
                          type: string
                        operator:
                          description: operator represents a key's relationship to a set of values. Valid operators are In, NotIn, Exists and DoesNotExist.
                          type: string
                        values:
                          description: values is an array of string values. If the operator is In or NotIn, the values array must be non-empty. If the operator is Exists or DoesNotExist, the values array must be empty. This array is replaced during a strategic merge patch.
                          items:
                            type: string
                          type: array
                      required:
                      - key
                      - operator
                      type: object
                    type: array
                  matchLabels:
                    additionalProperties:
                      type: string
                    description: matchLabels is a map of {key,value} pairs. A single {key,value} in the matchLabels map is equivalent to an element of matchExpressions, whose key field is "key", the operator is "In", and the values array contains only "value". The requirements are ANDed.
                    type: object
                type: object
            type: object
          status:
            description: DeviceClaimStatus defines the observed state of BlockDeviceClaim
            properties:
              phase:
                description: Phase represents the current phase of the claim
                type: string
            required:
            - phase
            type: object
        type: object
    served: true
    storage: true
    subresources: {}
status:
  acceptedNames:
    kind: ""
    plural: ""
  conditions: []
  storedVersions: []

---

# This is the node-disk-manager related config.
# It can be used to customize the disk probes and filters
apiVersion: v1
kind: ConfigMap
metadata:
  name: openebs-ndm-config
  namespace: openebs
  labels:
    openebs.io/component-name: ndm-config
data:
  # udev-probe is the default (primary) probe and should be enabled to run ndm
  # filterconfigs contains configs of filters. To provide a group of include
  # and exclude values, add them as a comma-separated string
  node-disk-manager.config: |
    probeconfigs:
      - key: udev-probe
        name: udev probe
        state: true
      - key: seachest-probe
        name: seachest probe
        state: false
      - key: smart-probe
        name: smart probe
        state: true
    filterconfigs:
      - key: os-disk-exclude-filter
        name: os disk exclude filter
        state: true
        exclude: "/,/etc/hosts,/boot"
      - key: vendor-filter
        name: vendor filter
        state: true
        include: ""
        exclude: "CLOUDBYT,OpenEBS"
      - key: path-filter
        name: path filter
        state: true
        include: ""
        exclude: "/dev/loop,/dev/fd0,/dev/sr0,/dev/ram,/dev/md,/dev/dm-,/dev/rbd,/dev/zd"

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: openebs-ndm
  namespace: openebs
  labels:
    name: openebs-ndm
    openebs.io/component-name: ndm
    openebs.io/version: 2.12.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm
      openebs.io/component-name: ndm
  updateStrategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        name: openebs-ndm
        openebs.io/component-name: ndm
        openebs.io/version: 2.12.0
    spec:
      # By default the node-disk-manager will be run on all kubernetes nodes
      # If you would like to limit this to only some nodes, say the nodes
      # that have storage attached, you could label those nodes and use
      # nodeSelector.
      #
      # e.g. label the storage nodes with - "openebs.io/nodegroup"="storage-node"
      # kubectl label node <node-name> "openebs.io/nodegroup"="storage-node"
      #nodeSelector:
      #  "openebs.io/nodegroup": "storage-node"
      serviceAccountName: openebs-maya-operator
      hostNetwork: true
      # host PID is used to check status of iSCSI Service when the NDM
      # API service is enabled
      #hostPID: true
      containers:
      - name: node-disk-manager
        image: openebs/node-disk-manager:1.6.1
        args:
          - -v=4
          # The feature-gate is used to enable the new UUID algorithm.
          - --feature-gates="GPTBasedUUID"
          # Detect mount point and filesystem changes without restart.
          # Uncomment the line below to enable the feature.
          # - --feature-gates="MountChangeDetection"
          # The feature gate is used to start the gRPC API service. The gRPC server
          # starts at 9115 port by default. This feature is currently in Alpha state
          # - --feature-gates="APIService"
          # The feature gate is used to enable NDM, to create blockdevice resources
          # for unused partitions on the OS disk
          # - --feature-gates="UseOSDisk"
        imagePullPolicy: IfNotPresent
        securityContext:
          privileged: true
        volumeMounts:
        - name: config
          mountPath: /host/node-disk-manager.config
          subPath: node-disk-manager.config
          readOnly: true
        # make udev database available inside container
        - name: udev
          mountPath: /run/udev
        - name: procmount
          mountPath: /host/proc
          readOnly: true
        - name: devmount
          mountPath: /dev
        - name: basepath
          mountPath: /var/openebs/ndm
        - name: sparsepath
          mountPath: /var/openebs/sparse
        env:
        # namespace in which NDM is installed will be passed to NDM Daemonset
        # as environment variable
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # pass hostname as env variable using downward API to the NDM container
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        # specify the directory where the sparse files need to be created.
        # if not specified, then sparse files will not be created.
        - name: SPARSE_FILE_DIR
          value: "/var/openebs/sparse"
        # Size (in bytes) of the sparse file to be created: 10737418240 bytes = 10 GiB.
        - name: SPARSE_FILE_SIZE
          value: "10737418240"
        # Specify the number of sparse files to be created (0 = none)
        - name: SPARSE_FILE_COUNT
          value: "0"
        livenessProbe:
          exec:
            command:
            - pgrep
            - "ndm"
          initialDelaySeconds: 30
          periodSeconds: 60
      volumes:
      - name: config
        configMap:
          name: openebs-ndm-config
      - name: udev
        hostPath:
          path: /run/udev
          type: Directory
      # mount /proc (to access mount file of process 1 of host) inside container
      # to read mount-point of disks and partitions
      - name: procmount
        hostPath:
          path: /proc
          type: Directory
      # the /dev directory is mounted so that we have access to the devices that
      # are connected at runtime of the pod.
      - name: devmount
        hostPath:
          path: /dev
          type: Directory
      - name: basepath
        hostPath:
          path: /var/openebs/ndm
          type: DirectoryOrCreate
      - name: sparsepath
        hostPath:
          path: /var/openebs/sparse

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-ndm-operator
  namespace: openebs
  labels:
    name: openebs-ndm-operator
    openebs.io/component-name: ndm-operator
    openebs.io/version: 2.12.0
spec:
  selector:
    matchLabels:
      name: openebs-ndm-operator
      openebs.io/component-name: ndm-operator
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-ndm-operator
        openebs.io/component-name: ndm-operator
        openebs.io/version: 2.12.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: node-disk-operator
        image: openebs/node-disk-operator:1.6.1
        imagePullPolicy: IfNotPresent
        env:
        - name: WATCH_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        # the service account of the ndm-operator pod
        - name: SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPERATOR_NAME
          value: "node-disk-operator"
        - name: CLEANUP_JOB_IMAGE
          value: "openebs/linux-utils:2.12.0"
        # OPENEBS_IO_IMAGE_PULL_SECRETS environment variable is used to pass the image pull secrets
        # to the cleanup pod launched by NDM operator
        #- name: OPENEBS_IO_IMAGE_PULL_SECRETS
        #  value: ""
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8585
          initialDelaySeconds: 15
          periodSeconds: 20
        readinessProbe:
          httpGet:
            path: /readyz
            port: 8585
          initialDelaySeconds: 5
          periodSeconds: 10

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-admission-server
  namespace: openebs
  labels:
    app: admission-webhook
    openebs.io/component-name: admission-webhook
    openebs.io/version: 2.12.0
spec:
  replicas: 1
  strategy:
    type: Recreate
    rollingUpdate: null
  selector:
    matchLabels:
      app: admission-webhook
  template:
    metadata:
      labels:
        app: admission-webhook
        openebs.io/component-name: admission-webhook
        openebs.io/version: 2.12.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: admission-webhook
        image: openebs/admission-server:2.12.0
        imagePullPolicy: IfNotPresent
        args:
        - -alsologtostderr
        - -v=2
        - 2>&1
        env:
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: ADMISSION_WEBHOOK_NAME
          value: "openebs-admission-server"
        - name: ADMISSION_WEBHOOK_FAILURE_POLICY
          value: "Fail"
        # Process name used for matching is limited to the 15 characters
        # present in the pgrep output.
        # So fullname can't be used here with pgrep (>15 chars). A regular expression
        # that matches the entire command name has to be specified.
        # Anchor `^` : matches any string that starts with `admission-serve`
        # `.*`: matches any string that has `admission-serve` followed by zero or more char
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - test `pgrep -c "^admission-serve.*"` = 1
          initialDelaySeconds: 30
          periodSeconds: 60

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: openebs-localpv-provisioner
  namespace: openebs
  labels:
    name: openebs-localpv-provisioner
    openebs.io/component-name: openebs-localpv-provisioner
    openebs.io/version: 2.12.0
spec:
  selector:
    matchLabels:
      name: openebs-localpv-provisioner
      openebs.io/component-name: openebs-localpv-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        name: openebs-localpv-provisioner
        openebs.io/component-name: openebs-localpv-provisioner
        openebs.io/version: 2.12.0
    spec:
      serviceAccountName: openebs-maya-operator
      containers:
      - name: openebs-provisioner-hostpath
        imagePullPolicy: IfNotPresent
        image: openebs/provisioner-localpv:2.12.0
        args:
          - "--bd-time-out=$(BDC_BD_BIND_RETRIES)"
        env:
        # OPENEBS_IO_K8S_MASTER enables openebs provisioner to connect to K8s
        # based on this address. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_K8S_MASTER
        #  value: "http://10.128.0.12:8080"
        # OPENEBS_IO_KUBE_CONFIG enables openebs provisioner to connect to K8s
        # based on this config. This is ignored if empty.
        # This is supported for openebs provisioner version 0.5.2 onwards
        #- name: OPENEBS_IO_KUBE_CONFIG
        #  value: "/home/ubuntu/.kube/config"
        # This sets the number of times the provisioner should try
        # with a polling interval of 5 seconds, to get the Blockdevice
        # Name from a BlockDeviceClaim, before the BlockDeviceClaim
        # is deleted. E.g. 12 * 5 seconds = 60 seconds timeout
        - name: BDC_BD_BIND_RETRIES
          value: "12"
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: OPENEBS_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        # OPENEBS_SERVICE_ACCOUNT provides the service account of this pod as
        # environment variable
        - name: OPENEBS_SERVICE_ACCOUNT
          valueFrom:
            fieldRef:
              fieldPath: spec.serviceAccountName
        - name: OPENEBS_IO_ENABLE_ANALYTICS
          value: "true"
        - name: OPENEBS_IO_INSTALLER_TYPE
          value: "openebs-operator"
        - name: OPENEBS_IO_HELPER_IMAGE
          value: "openebs/linux-utils:2.12.0"
        - name: OPENEBS_IO_BASE_PATH
          value: "/var/openebs/local"
        # LEADER_ELECTION_ENABLED is used to enable/disable leader election. By default
        # leader election is enabled.
        #- name: LEADER_ELECTION_ENABLED
        #  value: "true"
        # OPENEBS_IO_IMAGE_PULL_SECRETS environment variable is used to pass the image pull secrets
        # to the helper pod launched by local-pv hostpath provisioner
        #- name: OPENEBS_IO_IMAGE_PULL_SECRETS
        #  value: ""
        # Process name used for matching is limited to the 15 characters
        # present in the pgrep output.
        # So fullname can't be used here with pgrep (>15 chars). A regular expression
        # that matches the entire command name has to be specified.
        # Anchor `^` : matches any string that starts with `provisioner-loc`
        # `.*`: matches any string that has `provisioner-loc` followed by zero or more char
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - test `pgrep -c "^provisioner-loc.*"` = 1
          initialDelaySeconds: 30
          periodSeconds: 60

---
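The liveness probes in the manifest above run `pgrep -c` against a 15-character prefix of each binary name. The Python sketch below mimics that check to show why: the kernel truncates process names to 15 characters, so a pattern spelling out the full name can never match. The process table is hypothetical; only the truncation rule and the probe's `= 1` test come from the manifest.

```python
import re

# Linux stores at most 15 characters of a process name in /proc/<pid>/comm
# (TASK_COMM_LEN is 16 bytes including the trailing NUL), and pgrep matches
# its pattern against that truncated name unless -f is given.
COMM_MAX = 15


def comm_name(binary_name: str) -> str:
    """Return the process name as pgrep sees it."""
    return binary_name[:COMM_MAX]


def pgrep_count(pattern: str, process_names: list[str]) -> int:
    """Count processes whose (truncated) name matches pattern, like `pgrep -c`."""
    regex = re.compile(pattern)
    return sum(1 for name in process_names if regex.search(comm_name(name)))


def probe_passes(pattern: str, process_names: list[str]) -> bool:
    """Mirror `test $(pgrep -c PATTERN) = 1`: healthy only if exactly one match."""
    return pgrep_count(pattern, process_names) == 1


# Hypothetical process table inside the provisioner container.
procs = ["openebs-provisioner", "sh"]

print(comm_name("openebs-provisioner"))              # openebs-provisi
print(probe_passes("^openebs-provisioner$", procs))  # False: the full name is never seen
print(probe_passes("^openebs-provisi.*", procs))     # True
```

Because the probe compares the count with exactly 1, a second matching process would also make it fail.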

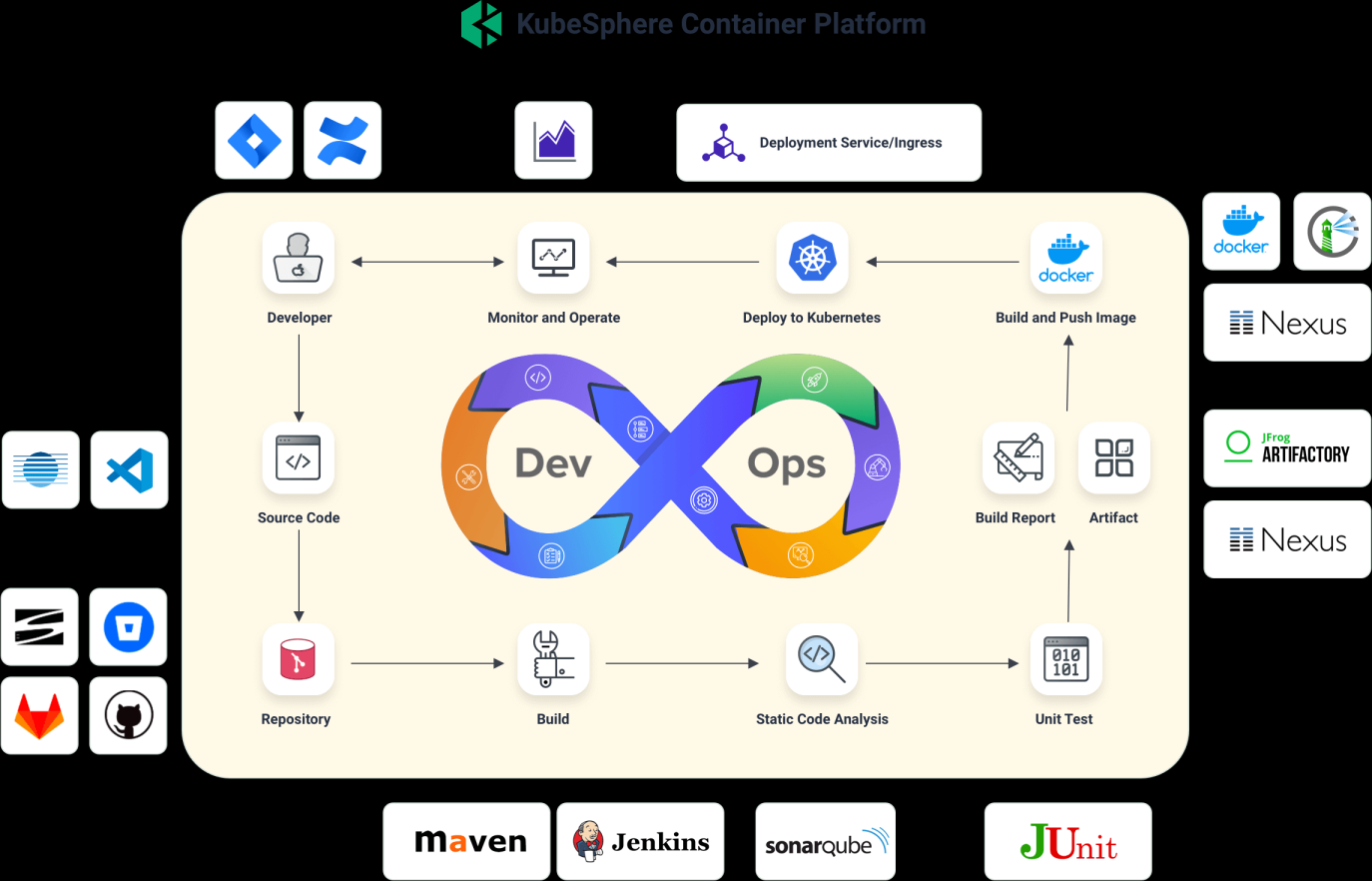

KubeSphere 3.0.0

https://v3-0.docs.kubesphere.io/zh/docs/pluggable-components/devops/

Prerequisites:

Kubernetes version 1.15.x, 1.16.x, 1.17.x, or 1.18.x

Available CPU > 1 core; memory > 2 GB

The Kubernetes cluster has a default StorageClass configured (check with kubectl get sc).
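The prerequisites above can be expressed as a small check. This is a minimal sketch: the `ClusterFacts` fields are hypothetical and would have to be filled in by hand from `kubectl version`, the node resources, and `kubectl get sc` (which marks the default class with `(default)`).

```python
import re
from dataclasses import dataclass

# Requirements listed above for KubeSphere 3.0.0.
SUPPORTED_MINORS = {(1, 15), (1, 16), (1, 17), (1, 18)}
MIN_CPU_CORES = 1  # available CPU must be > 1 core
MIN_MEMORY_GB = 2  # available memory must be > 2 GB


@dataclass
class ClusterFacts:
    """Hypothetical summary filled in from kubectl output."""
    server_version: str             # e.g. "v1.17.3" from `kubectl version`
    cpu_cores: float                # available CPU cores
    memory_gb: float                # available memory in GB
    has_default_storageclass: bool  # a class marked "(default)" in `kubectl get sc`


def major_minor(version: str) -> tuple[int, int]:
    """Turn 'v1.17.3', '1.17.3-eks' or '1.17+' into (1, 17)."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(re.match(r"\d+", minor).group())


def check_prerequisites(facts: ClusterFacts) -> list[str]:
    """Return the unmet prerequisites; an empty list means all checks pass."""
    problems = []
    if major_minor(facts.server_version) not in SUPPORTED_MINORS:
        problems.append(f"Kubernetes {facts.server_version} is not 1.15-1.18")
    if not facts.cpu_cores > MIN_CPU_CORES:
        problems.append("need more than 1 available CPU core")
    if not facts.memory_gb > MIN_MEMORY_GB:
        problems.append("need more than 2 GB of available memory")
    if not facts.has_default_storageclass:
        problems.append("no default StorageClass")
    return problems


print(check_prerequisites(ClusterFacts("v1.17.3", 2, 4, True)))  # []
print(check_prerequisites(ClusterFacts("v1.20.0", 1, 4, False)))
```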

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/cluster-configuration.yaml

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

kubectl get pod --all-namespaces

kubectl get svc/ks-console -n kubesphere-system
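The install steps above amount to "wait until the pods are up, then read the console's NodePort." The sketch below scripts both checks by parsing `kubectl` table output. The helper names are hypothetical. It assumes single-namespace `--no-headers` output, where STATUS is column 3 and PORT(S) is column 5. A pod whose STATUS is Running but whose READY column is 0/1 still counts as done. A missing or empty namespace also ends the wait. `kubectl get svc/ks-console -n kubesphere-system -o jsonpath='{.spec.ports[0].nodePort}'` is a parsing-free alternative for the port.

```shell
#!/bin/sh
# Hypothetical helpers for following the KubeSphere installation.

# Read `kubectl get pod -n <ns> --no-headers` output on stdin and print the
# pods whose STATUS column ($3) is neither Running nor Completed.
not_running_pods() {
  awk '$3 != "Running" && $3 != "Completed" { print $1 }'
}

# Read `kubectl get svc/ks-console -n kubesphere-system --no-headers` output
# and print the NodePort from the PORT(S) column, e.g. 80:30880/TCP -> 30880.
console_nodeport() {
  awk '{ split($5, parts, "[:/]"); print parts[2] }'
}

# Poll every 10 seconds until no pod in the namespace is pending.
wait_for_namespace() {
  while :; do
    pending=$(kubectl get pod -n "$1" --no-headers | not_running_pods)
    [ -z "$pending" ] && break
    echo "still waiting for: $pending"
    sleep 10
  done
}

# Example usage:
#   wait_for_namespace kubesphere-system
#   kubectl get svc/ks-console -n kubesphere-system --no-headers | console_nodeport
```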

KubeSphere 3.1.1

# A default StorageClass is required

kubectl get sc

# Docs: https://v2-1.docs.kubesphere.io/docs/zh-CN/appendix/install-openebs/

kubectl get node -o wide

kubectl describe node k8s-node1 | grep Taint

# Taints: node-role.kubernetes.io/master:NoSchedule

# Remove the taint

kubectl taint nodes k8s-node1 node-role.kubernetes.io/master:NoSchedule-

# openebs

kubectl create ns openebs

# This URL no longer works

kubectl apply -f https://openebs.github.io/charts/openebs-operator-1.5.0.yaml

# See https://blog.csdn.net/RookiexiaoMu_a/article/details/119859930

# Wait until the pods are running

kubectl get pod --all-namespaces

kubectl get sc

# Set the default StorageClass

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

# Check the OpenEBS pods; if all of them are Running, the storage has been installed successfully

kubectl get pod -n openebs

# Restore the taint

kubectl taint nodes k8s-node1 node-role.kubernetes.io/master=:NoSchedule
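The remove-taint / install / restore-taint pattern above can be wrapped so the taint is always put back, even if the install step fails. This is a hypothetical sketch. The taint key and node name come from the notes, and the `openebs-operator.yaml` file name in the usage comment is a placeholder, since the original URL no longer works. Restoring a `NoSchedule` taint does not evict pods already running on the node. That is why the wrapped command should wait for the OpenEBS pods before returning.

```shell
#!/bin/sh
# Hypothetical wrapper: untaint the master, run a command, restore the taint.

TAINT_KEY=node-role.kubernetes.io/master

remove_master_taint() {
  kubectl taint nodes "$1" "$TAINT_KEY:NoSchedule-"
}

restore_master_taint() {
  kubectl taint nodes "$1" "$TAINT_KEY=:NoSchedule"
}

# with_master_untainted NODE CMD [ARGS...]
# The taint is restored even if CMD fails; CMD's exit status is returned.
with_master_untainted() {
  node=$1
  shift
  remove_master_taint "$node" || return 1
  if "$@"; then status=0; else status=$?; fi
  restore_master_taint "$node"
  return "$status"
}

# Example usage (placeholder manifest file; waits for the pods before re-tainting):
#   with_master_untainted k8s-node1 sh -c \
#     'kubectl apply -f openebs-operator.yaml &&
#      kubectl wait --for=condition=Ready pod --all -n openebs --timeout=600s'
```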

######################################

# Preparation complete

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

# Wait until all pods are running (9 in kubesphere-system); this takes a long time

kubectl get pod --all-namespaces

kubectl get svc/ks-console -n kubesphere-system

# Make sure port 30880 is open in the security group.

Access the web console through the NodePort (IP:30880) with the default account and password (admin/P@88w0rd).

Plugin installation

Note: worker nodes need 8 GB of memory and 5 CPUs.

# This attempt failed

kubectl edit cm -n kubesphere-system ks-installer

# Wrong approach, none of this is needed; follow the official docs instead: https://v3-1.docs.kubesphere.io/zh/docs/pluggable-components/metrics-server/

wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml

vi cluster-configuration.yaml

metrics_server:
  enabled: true # Change "false" to "true".

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml
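The `vi` edit above (switch `metrics_server` from `false` to `true` before applying) can also be done non-interactively. This is a minimal awk sketch with a hypothetical `enable_component` helper. It assumes the component's `enabled:` line is the first `enabled:` after the component key, as in the fragment above. It flips only that one line and keeps its indentation and any trailing comment.

```shell
#!/bin/sh
# Hypothetical alternative to editing cluster-configuration.yaml in vi:
# flip the first `enabled: false` that follows the given component key.
enable_component() {
  awk -v comp="$1" '
    $1 == (comp ":") { in_comp = 1; print; next }
    in_comp && $1 == "enabled:" { sub(/enabled: *false/, "enabled: true"); in_comp = 0 }
    { print }
  '
}

# Example usage:
#   enable_component metrics_server < cluster-configuration.yaml > cc-metrics.yaml
#   kubectl apply -f cc-metrics.yaml
```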

# Enable after installation

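Log in to the console as admin. Click Platform in the upper-left corner and select Cluster Management.

Click CRDs, enter clusterconfiguration in the search bar, and click the search result to open its detail page.

In the resource list, click the icon to the right of ks-installer and select Edit YAML.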

metrics_server:
  enabled: true # Change "false" to "true".

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

kubectl get pod -n kube-system
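The console steps above edit the `ks-installer` ClusterConfiguration. The same change could be made from the command line with a JSON merge patch. This is a sketch under assumptions. It assumes the resource is addressable as `clusterconfiguration`, that the object is `ks-installer` in `kubesphere-system`, and that the component's `enabled` flag sits directly under `spec`, as in the YAML fragments in these notes. `component_patch` is a hypothetical helper. Components whose switch is nested deeper would need a different patch.

```shell
#!/bin/sh
# Hypothetical CLI alternative to the console steps: build a JSON merge patch
# that sets spec.<component>.enabled on the ks-installer ClusterConfiguration.
component_patch() {
  case "$2" in
    true|false) ;;
    *) echo "usage: component_patch COMPONENT true|false" >&2; return 1 ;;
  esac
  printf '{"spec":{"%s":{"enabled":%s}}}\n' "$1" "$2"
}

# Example usage (custom resources do not support strategic merge patches,
# hence --type merge):
#   kubectl -n kubesphere-system patch clusterconfiguration ks-installer \
#     --type merge -p "$(component_patch metrics_server true)"
```

After patching, the installer logs and the `kube-system` pod list shown above can be used to confirm that metrics-server comes up.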

# metrics-server-6c767c9f94-hfsb7

Enabled:

devops (sonarqube), console, notification, alerting

Disabled:

metrics_server, logging, openpitrix

metrics_server:
  enabled: false
console:
  enableMultiLogin: true
  port: 30880
logging:
  enabled: false
  logsidecar:
    enabled: true
    replicas: 2
devops: # (CPU: 0.47 Core, Memory: 8.6 G)
  enabled: true
  jenkinsMemoryLim: 2Gi # Jenkins memory limit.
  jenkinsMemoryReq: 1000Mi # Jenkins memory request.
  jenkinsVolumeSize: 8Gi # Jenkins volume size.
  jenkinsJavaOpts_Xms: 512m # The following three fields are JVM parameters.
  jenkinsJavaOpts_Xmx: 512m
  jenkinsJavaOpts_MaxRAM: 2g

KubeSphere 3.2.0

Supports DevOps, but its cluster requirements are high; Kuboard's requirements are low.

All-in-one: installs everything.

# Start the installation