Basic concepts

https://www.yuque.com/leifengyang/oncloud/vgf9wk

I. Basics

helm

artifacthub.io: similar to Docker Hub, but for Helm charts

nginx

map $http_upgrade $connection_upgrade {

default upgrade;

'' close;

}

server{

listen 80;

server_name localhost 192.168.1.13;

location / {

# root D:/Environment/nginx-1.18.0/conf/conf.d;

# index index.html index.htm index.php;

proxy_set_header Host $host;

# proxy_pass http://127.0.0.1:8083;

proxy_pass http://192.168.56.100:30880;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection $connection_upgrade;

proxy_read_timeout 86400;

#proxy_redirect off;


proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_next_upstream error timeout invalid_header http_500 http_502 http_503 http_504;

proxy_max_temp_file_size 0;

proxy_connect_timeout 90;

proxy_send_timeout 90;

#proxy_read_timeout 90;

proxy_buffer_size 4k;

proxy_buffers 4 32k;

proxy_busy_buffers_size 64k;

proxy_temp_file_write_size 64k;

}

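}

II. Deploying applications

Workloads: Deployment (stateless microservices), StatefulSet (databases), DaemonSet (agents every node needs, such as a log collector)

Data storage

Network access

Middleware deployment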

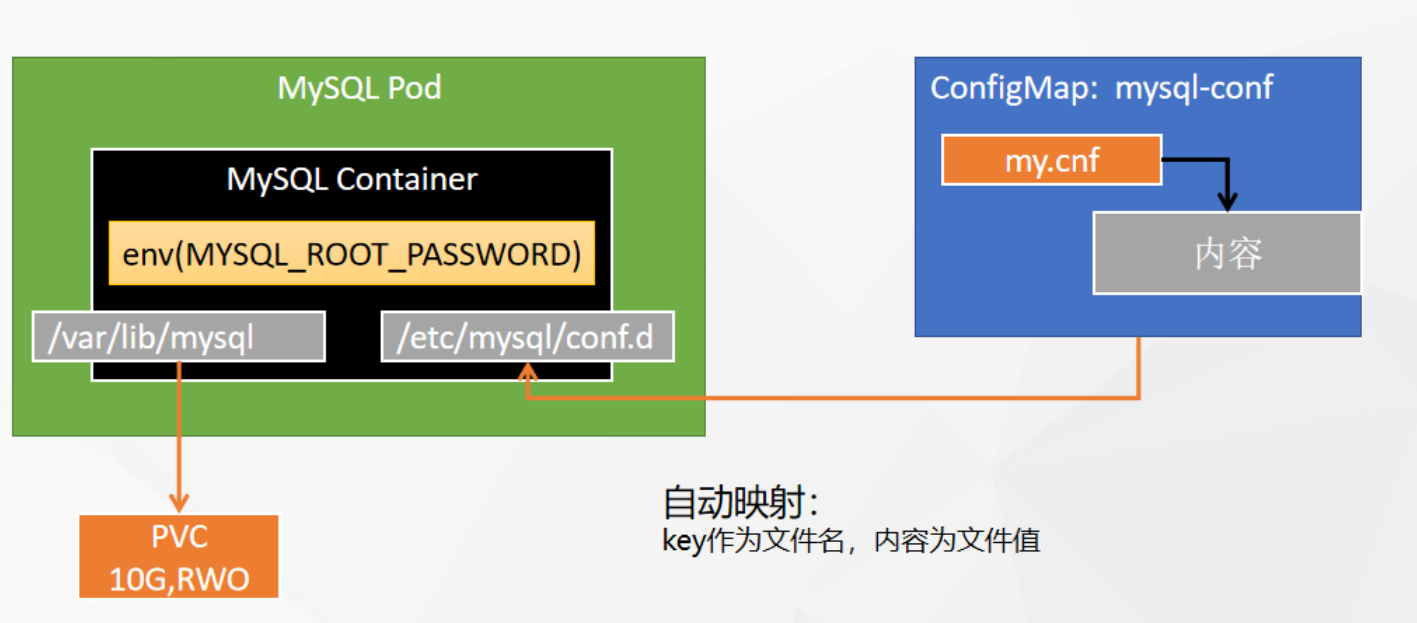

mysql

docker run -p 3306:3306 --name mysql-01 \

-v /mydata/mysql/log:/var/log/mysql \

-v /mydata/mysql/data:/var/lib/mysql \

-v /mydata/mysql/conf:/etc/mysql/conf.d \

-e MYSQL_ROOT_PASSWORD=root \

--restart=always \

-d mysql:5.7

[client]

default-character-set=utf8mb4

[mysql]

default-character-set=utf8mb4

[mysqld]

init_connect='SET collation_connection = utf8mb4_unicode_ci'

init_connect='SET NAMES utf8mb4'

character-set-server=utf8mb4

collation-server=utf8mb4_unicode_ci

skip-character-set-client-handshake

skip-name-resolve

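Configuration file (my.cnf)

Storage: mount a data volume

- Single-node read/write (ReadWriteOnce): the usual choice for stateful applications

- Multi-node read-only (ReadOnlyMany)

- Multi-node read/write (ReadWriteMany)

Workload: stateful application

- Search for the image and use its default port

- Add the environment variable MYSQL_ROOT_PASSWORD=123456

- Sync the host timezone

- Mount the data volume read/write at /var/lib/mysql

- Mount the configuration file read-only at /etc/mysql/conf.d; later changes are synced into the pod (the application must support hot reload to pick them up)

Service

- A Service is created by default (DNS: his-mysql-b88d.his), so the workload can be reached by domain name from inside the cluster; this default Service can be deleted

- Service for the workload

- Access type: internal (endpoint IP) or external (virtual IP); the virtual IP option is the convenient one

- labelSelector (app: his-mysql)

- Container port and Service port are both 3306

- For access from outside the cluster use NodePort (NodePort cannot be combined with the endpoint IP type); it exposes 3306:30992, so connect on port 30992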
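The `3306:30992` mapping above pairs the Service port with the port opened on every node. A minimal Python sketch of reading such a mapping, assuming the Kubernetes default NodePort range of 30000-32767 (a cluster can be configured with a different range). `parse_port_mapping` is a hypothetical helper name, not part of any tool:

```python
from typing import Tuple

# Default NodePort range in Kubernetes; assumed here, a cluster may override it.
NODE_PORT_RANGE = range(30000, 32768)


def parse_port_mapping(mapping: str) -> Tuple[int, int]:
    """Split 'servicePort:nodePort' (e.g. '3306:30992') into two integers."""
    service_port, node_port = (int(part) for part in mapping.split(":"))
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"node port {node_port} is outside the default range")
    return service_port, node_port


service_port, node_port = parse_port_mapping("3306:30992")
print(f"inside the cluster: port {service_port}")
print(f"outside the cluster: <node-ip>:{node_port}")
```

Clients inside the cluster keep using 3306 through the Service name; only external clients use the node port.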

redis

# Create the configuration file

## 1. Prepare the redis configuration file

mkdir -p /mydata/redis/conf && vim /mydata/redis/conf/redis.conf

## Example configuration

appendonly yes

port 6379

bind 0.0.0.0

# Start redis with docker

docker run -d -p 6379:6379 --restart=always \

-v /mydata/redis/conf/redis.conf:/etc/redis/redis.conf \

-v /mydata/redis-01/data:/data \

--name redis-01 redis:6.2.5 \

redis-server /etc/redis/redis.conf

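Configuration: redis.conf

Stateful workload

Image redis, default port

Start command: redis-server /etc/redis/redis.conf

Sync the host timezone

Volume template: single-node read/write at /data; each additional replica gets its own volume created automatically, so every redis instance holds different data

Configuration file redis.conf, read-only, mounted at /etc/redis

Edit the Service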

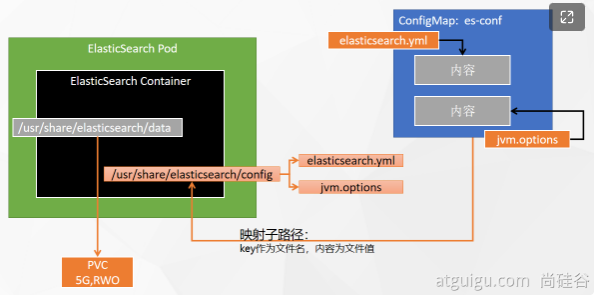

elasticSearch

# Create the data directory

mkdir -p /mydata/es-01 && chmod 777 -R /mydata/es-01

# Start the container

docker run --restart=always -d -p 9200:9200 -p 9300:9300 \

-e "discovery.type=single-node" \

-e ES_JAVA_OPTS="-Xms512m -Xmx512m" \

-v es-config:/usr/share/elasticsearch/config \

-v /mydata/es-01/data:/usr/share/elasticsearch/data \

--name es-01 \

elasticsearch:7.13.4

# Permissions may still be missing

chmod 777 -R /mydata/es-01

docker exec -it es-01 /bin/bash

cd config

elasticsearch.yml

cluster.name: "docker-cluster"

network.host: 0.0.0.0

jvm.options

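Do not mount the configuration over the whole config directory, or every file in it is overwritten; only jvm.options and elasticsearch.yml need to be replaced

Add the configuration

Stateful workload

Image: elasticsearch:7.13.4

Environment variables

- discovery.type=single-node

- ES_JAVA_OPTS="-Xms512m -Xmx512m"

Sync the host timezone

Volume template: read/write at /usr/share/elasticsearch/data

Configuration files, read/write: mounting at /usr/share/elasticsearch/config replaces the whole directory, so mount each file by subpath:

- /usr/share/elasticsearch/config/elasticsearch.yml: set elasticsearch.yml as the subpath, choose specific keys and paths, elasticsearch.yml : elasticsearch.yml

- /usr/share/elasticsearch/config/jvm.options: set jvm.options as the subpath, choose specific keys and paths, jvm.options : jvm.options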
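The two subpath mounts above correspond to `volumeMounts` entries that each carry a `subPath`. A minimal Python sketch that builds those entries, assuming a volume named `es-config` (a hypothetical name; use whatever the configuration volume is called in the workload):

```python
import json

CONFIG_DIR = "/usr/share/elasticsearch/config"


def subpath_mounts(volume_name, file_names):
    """Build one volumeMount per file so the rest of CONFIG_DIR stays intact."""
    return [
        {
            "name": volume_name,
            "mountPath": f"{CONFIG_DIR}/{file_name}",
            "subPath": file_name,
        }
        for file_name in file_names
    ]


# "es-config" is an assumed volume name, used only for illustration.
mounts = subpath_mounts("es-config", ["elasticsearch.yml", "jvm.options"])
print(json.dumps(mounts, indent=2))
```

One trade-off to keep in mind: a ConfigMap mounted through `subPath` does not receive automatic updates, so the pod has to be restarted after the configuration changes.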

Service

Ports: http 9200 / tcp 9300

jvm.options

################################################################

##

## JVM configuration

##

################################################################

##

## WARNING: DO NOT EDIT THIS FILE. If you want to override the

## JVM options in this file, or set any additional options, you

## should create one or more files in the jvm.options.d

## directory containing your adjustments.

##

## See https://www.elastic.co/guide/en/elasticsearch/reference/current/jvm-options.html

## for more information.

##

################################################################

################################################################

## IMPORTANT: JVM heap size

################################################################

##

## The heap size is automatically configured by Elasticsearch

## based on the available memory in your system and the roles

## each node is configured to fulfill. If specifying heap is

## required, it should be done through a file in jvm.options.d,

## and the min and max should be set to the same value. For

## example, to set the heap to 4 GB, create a new file in the

## jvm.options.d directory containing these lines:

##

## -Xms4g

## -Xmx4g

##

## See https://www.elastic.co/guide/en/elasticsearch/reference/current/heap-size.html

## for more information

##

################################################################

################################################################

## Expert settings

################################################################

##

## All settings below here are considered expert settings. Do

## not adjust them unless you understand what you are doing. Do

## not edit them in this file; instead, create a new file in the

## jvm.options.d directory containing your adjustments.

##

################################################################

## GC configuration

8-13:-XX:+UseConcMarkSweepGC

8-13:-XX:CMSInitiatingOccupancyFraction=75

8-13:-XX:+UseCMSInitiatingOccupancyOnly

## G1GC Configuration

# NOTE: G1 GC is only supported on JDK version 10 or later

# to use G1GC, uncomment the next two lines and update the version on the

# following three lines to your version of the JDK

# 10-13:-XX:-UseConcMarkSweepGC

# 10-13:-XX:-UseCMSInitiatingOccupancyOnly

14-:-XX:+UseG1GC

## JVM temporary directory

-Djava.io.tmpdir=${ES_TMPDIR}

## heap dumps

# generate a heap dump when an allocation from the Java heap fails; heap dumps

# are created in the working directory of the JVM unless an alternative path is

# specified

-XX:+HeapDumpOnOutOfMemoryError

# specify an alternative path for heap dumps; ensure the directory exists and

# has sufficient space

-XX:HeapDumpPath=data

# specify an alternative path for JVM fatal error logs

-XX:ErrorFile=logs/hs_err_pid%p.log

## JDK 8 GC logging

8:-XX:+PrintGCDetails

8:-XX:+PrintGCDateStamps

8:-XX:+PrintTenuringDistribution

8:-XX:+PrintGCApplicationStoppedTime

8:-Xloggc:logs/gc.log

8:-XX:+UseGCLogFileRotation

8:-XX:NumberOfGCLogFiles=32

8:-XX:GCLogFileSize=64m

# JDK 9+ GC logging

9-:-Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m

App store

App repository: helm

In the KubeSphere workspace, add the repository URL: https://charts.bitnami.com/bitnami

Application workloads > Apps > Deploy new app > App templates > bitnami

Ruoyi-cloud

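nacos: edit application.properties to use the MySQL database

startup.cmd -m standalone

Update every MySQL and redis connection setting stored in nacos

Considerations when moving to the cloud: stateless applications (build images), stateful applications (data storage), networking, application configuration

nacos cluster [depends on mysql]

nacos depends on the database; configure a liveness probe

nacos cluster deployment: cluster.conf lists every ip:port; use fixed domain names

application.properties

(incomplete excerpt)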

spring.datasource.platform=mysql

db.num=1

db.url.0=jdbc:mysql://his-nacos.his:3306/nacos?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true

db.user=nacos_devtest

db.password=youdontknow

cluster.conf

The cluster setup produces errors, so stay with a single node for now

192.168.16.101:8847

192.168.16.102

192.168.16.103

his-nacos-v1-0.his-nacos.his.svc.cluster.local:8848

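his-nacos-v1-1.his-nacos.his.svc.cluster.local:8848

Test: the most direct way is to create a stateful service with at least 1400M of memory, image nacos/nacos-server:v2.0.3, tcp 8848, 9848?, 9849, and sync the host timezone

his-nacos.his will resolve to: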

his-nacos-v1-0.his-nacos.his.svc.cluster.local
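The fixed domain names follow the StatefulSet pattern `<pod>.<service>.<namespace>.svc.<cluster-domain>`, where the pod name is the StatefulSet name plus an ordinal. A small Python sketch that generates cluster.conf lines from that pattern, assuming the default cluster domain `cluster.local` and a headless Service named `his-nacos`. Both function names are hypothetical:

```python
def pod_fqdn(statefulset, ordinal, service, namespace, cluster_domain="cluster.local"):
    """DNS name of one StatefulSet pod behind its headless Service."""
    return f"{statefulset}-{ordinal}.{service}.{namespace}.svc.{cluster_domain}"


def cluster_conf(statefulset, service, namespace, replicas, port=8848):
    """One 'host:port' line per replica, in the layout cluster.conf uses."""
    return [
        f"{pod_fqdn(statefulset, i, service, namespace)}:{port}"
        for i in range(replicas)
    ]


for line in cluster_conf("his-nacos-v1", "his-nacos", "his", replicas=2):
    print(line)
# his-nacos-v1-0.his-nacos.his.svc.cluster.local:8848
# his-nacos-v1-1.his-nacos.his.svc.cluster.local:8848
```

Because the names depend only on the StatefulSet, Service and namespace, they stay valid when a pod is rescheduled and receives a new IP.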

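Create the configuration:

application.properties (edit the database settings)

cluster.conf (domain names)

Mount both read-only by subpath under /home/nacos/conf

Environment variable: MODE=standalone

Health checker: liveness check on port 8848, initial delay 20 s, timeout 3 s

Check the container logs to confirm it started correctly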

Dockerfile

FROM openjdk:8-jdk

LABEL maintainer=leifengyang

#docker run -e PARAMS="--server.port 9090"

ENV PARAMS="--server.port=8080 --spring.profiles.active=prod --spring.cloud.nacos.discovery.server-addr=his-nacos.his:8848 --spring.cloud.nacos.config.server-addr=his-nacos.his:8848 --spring.cloud.nacos.config.namespace=prod --spring.cloud.nacos.config.file-extension=yml"

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone

COPY target/*.jar /app.jar

EXPOSE 8080

#

ENTRYPOINT ["/bin/sh","-c","java -Dfile.encoding=utf8 -Djava.security.egd=file:/dev/./urandom -jar app.jar ${PARAMS}"]

nacos uses the prod namespace
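The Dockerfile passes `${PARAMS}` unquoted to `java`, so the shell splits it on whitespace into separate arguments. A minimal Python sketch of that expansion, assuming plain whitespace splitting and no glob characters in the value. `java_command` is a hypothetical helper used only for illustration:

```python
# Default value copied from the ENV PARAMS line of the Dockerfile.
DEFAULT_PARAMS = (
    "--server.port=8080 --spring.profiles.active=prod "
    "--spring.cloud.nacos.discovery.server-addr=his-nacos.his:8848 "
    "--spring.cloud.nacos.config.server-addr=his-nacos.his:8848 "
    "--spring.cloud.nacos.config.namespace=prod "
    "--spring.cloud.nacos.config.file-extension=yml"
)


def java_command(params=DEFAULT_PARAMS):
    """Mimic the sh -c expansion: the unquoted variable is split on whitespace."""
    base = ["java", "-Dfile.encoding=utf8",
            "-Djava.security.egd=file:/dev/./urandom", "-jar", "app.jar"]
    return base + params.split()


print(java_command())
# Equivalent of: docker run -e PARAMS="--server.port=9090" ...
print(java_command("--server.port=9090"))
```

The second call shows the catch: setting PARAMS at run time replaces the whole default, so the nacos address and namespace arguments disappear unless they are repeated in the new value.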

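- Package the jar with maven

- Upload it to the server

- Build the image from the Dockerfile with docker and push it to the Aliyun image registry (or Harbor)

- docker build -t ruoyi-auth:v1.0 -f Dockerfile .

docker login --username=forsum**** registry.cn-hangzhou.aliyuncs.com

docker tag [ImageId] registry.cn-hangzhou.aliyuncs.com/lfy_ruoyi/[image-name]:[image-version]

docker push registry.cn-hangzhou.aliyuncs.com/lfy_ruoyi/[image-name]:[image-version]

- In k8s, create a stateless service with the tcp port (put the gateway's sentinel configuration into nacos)

- In the nginx pod, use server_name _; to match every host name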
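The docker tag and docker push steps in the list above both use a reference of the form registry/namespace/name:version. A short Python sketch that composes it, assuming the remote repository is also called `ruoyi-auth` (the notes leave the image name as a placeholder) and that the local tag is used in place of `[ImageId]`:

```python
def image_ref(registry, namespace, name, version):
    """Compose 'registry/namespace/name:version' as used by docker tag and push."""
    return f"{registry}/{namespace}/{name}:{version}"


def push_commands(local_image, target):
    """Return the docker tag and docker push command lines for one image."""
    return [f"docker tag {local_image} {target}", f"docker push {target}"]


# "ruoyi-auth" as the remote repository name is an assumption for illustration.
target = image_ref("registry.cn-hangzhou.aliyuncs.com", "lfy_ruoyi", "ruoyi-auth", "v1.0")
for command in push_commands("ruoyi-auth:v1.0", target):
    print(command)
```

Generating both commands from one value keeps the tagged name and the pushed name identical, which is the easiest thing to get wrong when typing them by hand.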

III. DevOps

CI: continuous integration; CD: continuous delivery

Moving 尚医通 (yygh) to the cloud

| Middleware | In-cluster address | External address |
|---|---|---|
| Nacos | his-nacos.his:8848 | http://139.198.165.238:30349/nacos |
| MySQL | his-mysql.his:3306 | 139.198.165.238:31840 |
| Redis | his-redis.his:6379 | 139.198.165.238:31968 |
| Sentinel | his-sentinel.his:8080 | 31555 |
| MongoDB | mongodb.his:27017 | 30107 |
| RabbitMQ | rabbitmq-headless.his | 30459 |
| ElasticSearch | his-es.his:9200 | 139.198.165.238:31300 |

sentinel: image leifengyang/sentinel:1.8.2, then expose it for external access

Mongodb: Apps > Deploy new app > App templates > bitnami; disable username/password authentication

nacos configuration: service-cmn-prod.yml and so on

CICD

Basic flow

Specify the container -> git pull -> echo

maven: change the repository (mirror) configuration of the agent

<mirror>

<id>alimaven</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/repositories/central/</url>

<mirrorOf>central</mirrorOf>

</mirror>

The pipeline is very slow

kubectl get pod -A | grep maven

kubectl describe pod -n kubesphere-devops-system maven-sss

# Memory usage

kubectl top pods -A

kubectl top nodes

Pipeline steps:

- git clone

- mvn clean package -Dmaven.test.skip=true

- docker build -t hospital-manage:latest -f hospital-manage/Dockerfile ./hospital-manage

- docker login / tag / push to the container image registry, using the environment variables

stage('推送service-sms镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag service-sms:latest $REGISTRY/$DOCKERHUB_NAMESPACE/service-sms:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/service-sms:SNAPSHOT-$BUILD_NUMBER'

}

}

}

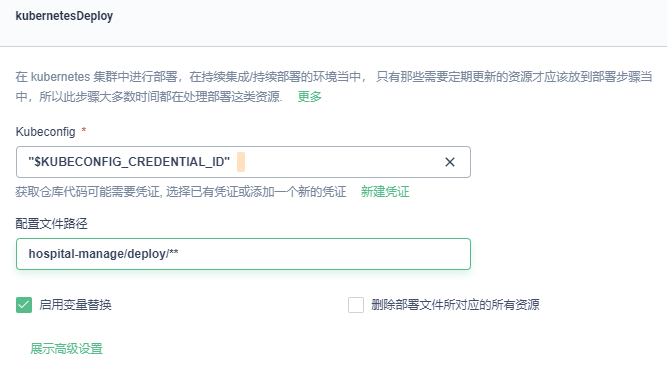

}

- Deploy: kubernetesDeploy

- env: KUBECONFIG_CREDENTIAL_ID = 'demo-kubeconfig'

- kubeconfig: "$KUBECONFIG_CREDENTIAL_ID"

- The imagePullSecrets entry in the project's deploy.yml uses the his project's registry secret (type dockerconfigjson)
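With `enableConfigSubstitution: true`, the `$VARIABLE` placeholders in deploy.yml are filled from the pipeline environment before the manifest is applied. The Python sketch below only imitates that effect with `string.Template`; it is not the plugin's implementation, and the build number 12 is a made-up value:

```python
from string import Template

# REGISTRY and ALIYUNHUB_NAMESPACE as set in the backend pipeline's environment
# block; BUILD_NUMBER comes from Jenkins at run time, 12 is only an example.
variables = {
    "REGISTRY": "registry.cn-qingdao.aliyuncs.com",
    "ALIYUNHUB_NAMESPACE": "ming_k8s_test",
    "BUILD_NUMBER": "12",
}

image_line = Template(
    "- image: $REGISTRY/$ALIYUNHUB_NAMESPACE/server-gateway:SNAPSHOT-$BUILD_NUMBER"
)
print(image_line.substitute(variables))
# - image: registry.cn-qingdao.aliyuncs.com/ming_k8s_test/server-gateway:SNAPSHOT-12
```

The push stage tags images with DOCKERHUB_NAMESPACE while deploy.yml reads ALIYUNHUB_NAMESPACE, so the two variables need the same value for the deployed image to exist.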

kubernetesDeploy

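Docker registry secret of the his project

Startup probe: the /actuator/health check reports down; add the configuration below to every service's nacos config (or remove the readiness probe, or upgrade to Spring Boot 2.3)

management:
  endpoints:
    web:
      exposure:
        include: "*"
  endpoint:
    health:
      show-details: always

Add an email notification; env can also be used (the SMTP email function must be configured first, see the official documentation)

Jenkinsfile

Backend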

pipeline {

agent {

node {

label 'maven'

}

}

stages {

stage('clone') {

agent none

steps {

container('maven') {

git(url: 'https://gitee.com/mingyuefusu/yygh-parent.git', credentialsId: 'gitee-mingyue', branch: 'master', changelog: true, poll: false)

sh 'ls'

}

}

}

stage('build') {

agent none

steps {

container('maven') {

sh 'mvn clean package -Dmaven.test.skip=true'

}

}

}

stage('default-2') {

parallel {

stage('构建hospital-manage镜像') {

agent none

steps {

container('maven') {

sh 'ls hospital-manage/target'

sh 'docker build -t hospital-manage:latest -f hospital-manage/Dockerfile ./hospital-manage/'

}

}

}

}

}

stage('default-3') {

parallel {

stage('推送hospital-manage镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag hospital-manage:latest $REGISTRY/$DOCKERHUB_NAMESPACE/hospital-manage:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/hospital-manage:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

}

}

stage('default-4') {

parallel {

stage('hospital-manage - 部署到dev环境') {

agent none

steps {

kubernetesDeploy(configs: 'hospital-manage/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

}

}

stage('deploy to production') {

agent none

steps {

kubernetesDeploy(configs: 'deploy/prod-ol/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

}

environment {

DOCKER_CREDENTIAL_ID = 'dockerhub-id'

GITHUB_CREDENTIAL_ID = 'github-id'

KUBECONFIG_CREDENTIAL_ID = 'demo-kubeconfig'

REGISTRY = 'registry.cn-qingdao.aliyuncs.com'

DOCKERHUB_NAMESPACE = 'ming_k8s_test'

GITHUB_ACCOUNT = 'kubesphere'

APP_NAME = 'devops-java-sample'

ALIYUNHUB_NAMESPACE = 'ming_k8s_test'

}

parameters {

string(name: 'TAG_NAME', defaultValue: '', description: '')

}

}

Frontend

pipeline {

agent {

node {

label 'nodejs'

}

}

stages {

stage('拉取代码') {

agent none

steps {

container('nodejs') {

git(url: 'https://gitee.com/leifengyang/yygh-admin.git', credentialsId: 'gitee-id', branch: 'master', changelog: true, poll: false)

sh 'ls -al'

}

}

}

stage('项目编译') {

agent none

steps {

container('nodejs') {

sh 'npm i node-sass --sass_binary_site=https://npm.taobao.org/mirrors/node-sass/'

sh 'npm install --registry=https://registry.npm.taobao.org'

sh 'npm run build'

sh 'ls'

}

}

}

stage('构建镜像') {

agent none

steps {

container('nodejs') {

sh 'ls'

sh 'docker build -t yygh-admin:latest -f Dockerfile .'

}

}

}

stage('推送镜像') {

agent none

steps {

container('nodejs') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag yygh-admin:latest $REGISTRY/$DOCKERHUB_NAMESPACE/yygh-admin:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/yygh-admin:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('部署到dev环境') {

agent none

steps {

kubernetesDeploy(configs: 'deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

// 1. Configure system-wide email: monitoring for the whole system

// 2. Edit the email settings in the ks-jenkins configuration: email sent by pipelines

stage('发送确认邮件') {

agent none

steps {

mail(to: '17512080612@163.com', subject: 'yygh-admin构建结果', body: "构建成功了 $BUILD_NUMBER")

}

}

}

environment {

DOCKER_CREDENTIAL_ID = 'dockerhub-id'

GITHUB_CREDENTIAL_ID = 'github-id'

KUBECONFIG_CREDENTIAL_ID = 'demo-kubeconfig'

REGISTRY = 'registry.cn-hangzhou.aliyuncs.com'

DOCKERHUB_NAMESPACE = 'lfy_hello'

GITHUB_ACCOUNT = 'kubesphere'

APP_NAME = 'devops-java-sample'

ALIYUNHUB_NAMESPACE = 'lfy_hello'

}

}

Deploy

Backend

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: server-gateway
  name: server-gateway
  namespace: his # the namespace must be specified
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  selector:
    matchLabels:
      app: server-gateway
  strategy:
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 50%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: server-gateway
    spec:
      imagePullSecrets:
        - name: aliyun-docker-hub # create this Aliyun registry credential in the project beforehand
      containers:
        - image: $REGISTRY/$ALIYUNHUB_NAMESPACE/server-gateway:SNAPSHOT-$BUILD_NUMBER
          # readinessProbe:
          #   httpGet:
          #     path: /actuator/health
          #     port: 8080
          #   timeoutSeconds: 10
          #   failureThreshold: 30
          #   periodSeconds: 5
          imagePullPolicy: Always
          name: app
          ports:
            - containerPort: 8080
              protocol: TCP
          resources:
            limits:
              cpu: 300m
              memory: 600Mi
            requests:
              cpu: 50m
              memory: 180Mi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: server-gateway
  name: server-gateway
  namespace: his
spec:
  ports:
    - name: http
      port: 8080
      protocol: TCP
      targetPort: 8080
      nodePort: 32607
  selector:
    app: server-gateway
  sessionAffinity: None
  type: NodePort

Frontend

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: yygh-admin
  name: yygh-admin
  namespace: his # the namespace must be specified
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  selector:
    matchLabels:
      app: yygh-admin
  strategy:
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 50%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: yygh-admin
    spec:
      imagePullSecrets:
        - name: aliyun-docker-hub # create this Aliyun registry credential in the project beforehand
      containers:
        - image: $REGISTRY/$ALIYUNHUB_NAMESPACE/yygh-admin:SNAPSHOT-$BUILD_NUMBER
          # readinessProbe:
          #   httpGet:
          #     path: /actuator/health
          #     port: 8080
          #   timeoutSeconds: 10
          #   failureThreshold: 30
          #   periodSeconds: 5
          imagePullPolicy: Always
          name: app
          ports:
            - containerPort: 80
              protocol: TCP
          resources:
            limits:
              cpu: 300m
              memory: 600Mi
            requests:
              cpu: 50m
              memory: 180Mi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: yygh-admin
  name: yygh-admin
  namespace: his
spec:
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 80
      nodePort: 32248
  selector:
    app: yygh-admin
  sessionAffinity: None
  type: NodePort

dangxiao

Infrastructure

- Mysql (volumes, configuration files and so on, via the app store)

- redis (app store)

- nacos

- skywalking

Platform credentials

- Gitee credential

- Aliyun image registry

- demo-kubeconfig, used for deployment

- Project secret: registry secret

Project files

- Dockerfile

- Jenkinsfile for the pipeline

- Deploy.yml for each service

Ingress or an nginx reverse proxy

Basic setup

| Middleware | In-cluster address | External address |
|---|---|---|
| Nacos | dangxiao-nacos.dangxiao:8848 | http://139.198.165.238:30349/nacos |
| MySQL | dangxiao-mysql.dangxiao:3306 | 139.198.165.238:31840 |
| Redis | dangxiao-redis.dangxiao:6379 | 139.198.165.238:31968 |
| Sentinel | dangxiao-sentinel.dangxiao:8080 | 31555 |
| MongoDB | mongodb.dangxiao:27017 | 30107 |
| RabbitMQ | rabbitmq-headless.dangxiao | 30459 |
| ElasticSearch | dangxiao-es.dangxiao:9200 | 139.198.165.238:31300 |

Nacos

application.properties

Change the mysql settings

server.servlet.contextPath=/nacos

### Default web server port:

server.port=8848

spring.datasource.platform=mysql

### Count of DB:

db.num=1

### Connect URL of DB:

db.url.0=jdbc:mysql://dangxiao-mysql.dangxiao:3306/psedu_nacos?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=UTC

db.user.0=root

db.password.0=123456

### Connection pool configuration: hikariCP

db.pool.config.connectionTimeout=30000

db.pool.config.validationTimeout=10000

db.pool.config.maximumPoolSize=20

db.pool.config.minimumIdle=2

nacos.naming.empty-service.auto-clean=true

nacos.naming.empty-service.clean.initial-delay-ms=50000

nacos.naming.empty-service.clean.period-time-ms=30000

### Metrics for elastic search

management.metrics.export.elastic.enabled=false

#management.metrics.export.elastic.host=http://localhost:9200

### Metrics for influx

management.metrics.export.influx.enabled=false

### If turn on the access log:

server.tomcat.accesslog.enabled=true

### The access log pattern:

server.tomcat.accesslog.pattern=%h %l %u %t "%r" %s %b %D %{User-Agent}i %{Request-Source}i

### The directory of access log:

server.tomcat.basedir=

nacos.security.ignore.urls=/,/error,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-ui/public/**,/v1/auth/**,/v1/console/health/**,/actuator/**,/v1/console/server/**

### The auth system to use, currently only 'nacos' and 'ldap' is supported:

nacos.core.auth.system.type=nacos

### If turn on auth system:

nacos.core.auth.enabled=false

### The token expiration in seconds:

nacos.core.auth.default.token.expire.seconds=18000

### The default token:

nacos.core.auth.default.token.secret.key=SecretKey012345678901234567890123456789012345678901234567890123456789

### Turn on/off caching of auth information. By turning on this switch, the update of auth information would have a 15 seconds delay.

nacos.core.auth.caching.enabled=true

### Since 1.4.1, Turn on/off white auth for user-agent: nacos-server, only for upgrade from old version.

nacos.core.auth.enable.userAgentAuthWhite=false

### Since 1.4.1, worked when nacos.core.auth.enabled=true and nacos.core.auth.enable.userAgentAuthWhite=false.

### The two properties is the white list for auth and used by identity the request from other server.

nacos.core.auth.server.identity.key=serverIdentity

nacos.core.auth.server.identity.value=security

#*************** Istio Related Configurations ***************#

### If turn on the MCP server:

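nacos.istio.mcp.server.enabled=false

Stateful application

nacos/nacos-server:v2.0.3

tcp: 8848 9848 9849

Environment variable: MODE=standalone

Health checker: liveness check on /nacos, port 8848, initial delay 20 s, timeout 3 s

Mount read-only by subpath at /home/nacos/conf/application.properties

Check the container logs to confirm it started correctly

Project files

deploy

The registry secret is required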

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: psedu-gateway
  name: psedu-gateway
  namespace: dangxiao # the namespace must be specified
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  selector:
    matchLabels:
      app: psedu-gateway
  strategy:
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 50%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: psedu-gateway
    spec:
      imagePullSecrets:
        - name: aliyun-docker-hub # create this Aliyun registry credential in the project beforehand
      containers:
        - image: $REGISTRY/$ALIYUNHUB_NAMESPACE/psedu-gateway:SNAPSHOT-$BUILD_NUMBER
          # readinessProbe:
          #   httpGet:
          #     path: /actuator/health
          #     port: 8080
          #   timeoutSeconds: 10
          #   failureThreshold: 30
          #   periodSeconds: 5
          imagePullPolicy: Always
          name: app
          ports:
            - containerPort: 8080
              protocol: TCP
          resources:
            limits:
              cpu: 300m
              memory: 600Mi
            requests:
              cpu: 50m
              memory: 180Mi
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: psedu-gateway
  name: psedu-gateway
  namespace: dangxiao
spec:
  ports:
    - name: http
      port: 8080
      protocol: TCP
      targetPort: 8080
  selector:
    app: psedu-gateway
  sessionAffinity: None
  type: ClusterIP

Jenkinsfile

pipeline {

agent {

node {

label 'maven'

}

}

stages {

stage('clone') {

agent none

steps {

container('maven') {

git(url: 'https://gitee.com/mingyuefusu/party-school-training-system.git', credentialsId: 'gitee-mingyue', branch: 'master', changelog: true, poll: false)

sh 'ls'

}

}

}

stage('build') {

agent none

steps {

container('maven') {

sh 'mvn clean package -Dmaven.test.skip=true'

}

}

}

stage('default-2') {

parallel {

stage('构建psedu-gateway镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-gateway/target'

sh 'docker build -t psedu-gateway:latest -f psedu-gateway/Dockerfile ./psedu-gateway/'

}

}

}

}

}

stage('default-3') {

parallel {

stage('推送psedu-gateway镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-gateway:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-gateway:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-gateway:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

}

}

stage('default-4') {

parallel {

stage('psedu-gateway - 部署到prod环境') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-gateway/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

}

}

}

environment {

DOCKER_CREDENTIAL_ID = 'dockerhub-id'

GITHUB_CREDENTIAL_ID = 'github-id'

KUBECONFIG_CREDENTIAL_ID = 'demo-kubeconfig'

REGISTRY = 'registry.cn-qingdao.aliyuncs.com'

DOCKERHUB_NAMESPACE = 'ming_k8s_test'

GITHUB_ACCOUNT = 'kubesphere'

APP_NAME = 'devops-java-sample'

ALIYUNHUB_NAMESPACE = 'ming_k8s_test'

}

parameters {

string(name: 'TAG_NAME', defaultValue: '', description: '')

}

}

Jenkinsfile for all services

pipeline {

agent {

node {

label 'maven'

}

}

stages {

stage('clone') {

agent none

steps {

container('maven') {

git(url: 'https://gitee.com/mingyuefusu/party-school-training-system.git', credentialsId: 'gitee-mingyue', branch: 'master', changelog: true, poll: false)

sh 'ls'

}

}

}

stage('build') {

agent none

steps {

container('maven') {

sh 'mvn clean package -Dmaven.test.skip=true'

}

}

}

stage('打包镜像') {

parallel {

stage('构建psedu-gateway镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-gateway/target'

sh 'docker build -t psedu-gateway:latest -f psedu-gateway/Dockerfile ./psedu-gateway/'

}

}

}

stage('构建psedu-auth镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-auth/target'

sh 'docker build -t psedu-auth:latest -f psedu-auth/Dockerfile ./psedu-auth/'

}

}

}

stage('构建psedu-base镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-modules/psedu-base/target'

sh 'docker build -t psedu-base:latest -f psedu-modules/psedu-base/Dockerfile ./psedu-modules/psedu-base/'

}

}

}

stage('构建psedu-exam镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-modules/psedu-exam/target'

sh 'docker build -t psedu-exam:latest -f psedu-modules/psedu-exam/Dockerfile ./psedu-modules/psedu-exam/'

}

}

}

stage('构建psedu-file镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-modules/psedu-file/target'

sh 'docker build -t psedu-file:latest -f psedu-modules/psedu-file/Dockerfile ./psedu-modules/psedu-file/'

}

}

}

stage('构建psedu-gen镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-modules/psedu-gen/target'

sh 'docker build -t psedu-gen:latest -f psedu-modules/psedu-gen/Dockerfile ./psedu-modules/psedu-gen/'

}

}

}

stage('构建psedu-job镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-modules/psedu-job/target'

sh 'docker build -t psedu-job:latest -f psedu-modules/psedu-job/Dockerfile ./psedu-modules/psedu-job/'

}

}

}

stage('构建psedu-system镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-modules/psedu-system/target'

sh 'docker build -t psedu-system:latest -f psedu-modules/psedu-system/Dockerfile ./psedu-modules/psedu-system/'

}

}

}

stage('构建psedu-monitor镜像') {

agent none

steps {

container('maven') {

sh 'ls psedu-visual/psedu-monitor/target'

sh 'docker build -t psedu-monitor:latest -f psedu-visual/psedu-monitor/Dockerfile ./psedu-visual/psedu-monitor/'

}

}

}

}

}

stage('推送镜像') {

parallel {

stage('推送psedu-gateway镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-gateway:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-gateway:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-gateway:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-auth镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-auth:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-auth:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-auth:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-base镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-base:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-base:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-base:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-exam镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-exam:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-exam:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-exam:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-file镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-file:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-file:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-file:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-gen镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-gen:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-gen:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-gen:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-job镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-job:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-job:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-job:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-system镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-system:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-system:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-system:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

stage('推送psedu-monitor镜像') {

agent none

steps {

container('maven') {

withCredentials([usernamePassword(credentialsId : 'aliyun-docker-registry' ,usernameVariable : 'DOCKER_USER_VAR' ,passwordVariable : 'DOCKER_PWD_VAR' ,)]) {

sh 'echo "$DOCKER_PWD_VAR" | docker login $REGISTRY -u "$DOCKER_USER_VAR" --password-stdin'

sh 'docker tag psedu-monitor:latest $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-monitor:SNAPSHOT-$BUILD_NUMBER'

sh 'docker push $REGISTRY/$DOCKERHUB_NAMESPACE/psedu-monitor:SNAPSHOT-$BUILD_NUMBER'

}

}

}

}

}

}

stage('部署prod') {

parallel {

stage('psedu-gateway - 部署到prod环境') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-gateway/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-auth - 部署到prod环境') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-auth/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-base - 部署到prod环境') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-modules/psedu-base/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-exam - deploy to prod environment') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-modules/psedu-exam/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-file - deploy to prod environment') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-modules/psedu-file/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-gen - deploy to prod environment') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-modules/psedu-gen/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-job - deploy to prod environment') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-modules/psedu-job/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-system - deploy to prod environment') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-modules/psedu-system/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

stage('psedu-monitor - deploy to prod environment') {

agent none

steps {

kubernetesDeploy(configs: 'psedu-visual/psedu-monitor/deploy/**', enableConfigSubstitution: true, kubeconfigId: "$KUBECONFIG_CREDENTIAL_ID")

}

}

}

}

}

environment {

DOCKER_CREDENTIAL_ID = 'dockerhub-id'

GITHUB_CREDENTIAL_ID = 'github-id'

KUBECONFIG_CREDENTIAL_ID = 'demo-kubeconfig'

REGISTRY = 'registry.cn-qingdao.aliyuncs.com'

DOCKERHUB_NAMESPACE = 'ming_k8s_test'

GITHUB_ACCOUNT = 'kubesphere'

APP_NAME = 'devops-java-sample'

ALIYUNHUB_NAMESPACE = 'ming_k8s_test'

}

parameters {

string(name: 'TAG_NAME', defaultValue: '', description: '')

}

}

ingress

kubectl apply -f ingress.yaml

kubectl get ingress -A

Expose the ingress NodePort
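The Ingress manifest that follows defines two path rules for host mingyuetest.cn: / goes to psedu-admin-front on port 80 and /prod-api goes to psedu-gateway on port 8080. A minimal sketch of that selection, assuming plain string-prefix matching where the longest matching path wins. route_backend is a hypothetical helper written for these notes, not part of kubectl or the controller.

```shell
# route_backend PATH
# Print the backend (serviceName:servicePort) that the two rules would select.
# Assumption: prefix matching, longest matching path wins.
route_backend() {
  case "$1" in
    /prod-api*) echo "psedu-gateway:8080" ;;    # rule with path /prod-api
    /*)         echo "psedu-admin-front:80" ;;  # rule with path /
    *)          echo "not a request path: $1" >&2; return 1 ;;
  esac
}

route_backend /index.html      # psedu-admin-front:80
route_backend /prod-api/login  # psedu-gateway:8080
```

The more specific /prod-api pattern is tested first, which is what makes the catch-all / rule a fallback.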

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: web-ingress
  namespace: dangxiao
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: mingyuetest.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: psedu-admin-front
              servicePort: 80 # Service port
          - path: /prod-api
            backend:
              serviceName: psedu-gateway
              servicePort: 8080

Install

kubectl apply -f ingress-controller.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: nginx-configuration
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses/status
    verbs:
      - update

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get

---

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: true
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
        - name: nginx-ingress-controller
          image: siriuszg/nginx-ingress-controller:0.20.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 10

---

apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
spec:
  #type: NodePort
  ports:
    - name: http
      port: 80
      targetPort: 80
      protocol: TCP
    - name: https
      port: 443
      targetPort: 443
      protocol: TCP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
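
Because the DaemonSet sets hostNetwork: true, the controller listens on ports 80 and 443 of each node it runs on, so the web-ingress rules can be checked with curl and a Host header. A rough sketch under those assumptions: check_ingress and the CURL override are hypothetical names introduced here, and the node address has to be supplied by the caller.

```shell
# check_ingress NODE_IP
# Request both Ingress paths through one node and print "<status> <path>".
# Assumptions: NODE_IP is the address of a node running the controller pod;
# the CURL variable is only an illustrative hook for dry runs
# (for example CURL=echo prints the arguments instead of sending a request).
check_ingress() {
  node_ip="$1"
  for req_path in / /prod-api/; do
    ${CURL:-curl} -s -o /dev/null -w "%{http_code} ${req_path}\n" \
      -H "Host: mingyuetest.cn" "http://${node_ip}${req_path}"
  done
}
```

Node addresses can be read from `kubectl -n ingress-nginx get pods -o wide`, then passed in as `check_ingress <node-ip>`.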